By Matthew Millar, R&D Scientist at ユニファ

Summary:

This blog looks for the best method of predicting future stock prices in general. It reviews prediction methods found in current research and in use in the industry, covering statistical methods, machine learning, artificial neural networks, and hybrid models. In the research reviewed, hybrid models gave better results than purely statistical methods and than pure machine learning or artificial neural network approaches.

Introduction:

Stock market prediction is the attempt to discover possible future values of a stock. Successful price predictions can yield higher profits, while bad predictions can produce losses. A common view holds that the market does not follow any regular pattern, so prediction with statistics or models is an impossible task because technical factors cannot capture all of the variables that shape the movement of the stock market. The efficient market hypothesis makes a similar point: a stock price already reflects all of the information that could affect it, so the only thing left to move the price is unknown information, which always carries a level of unpredictability.

Fundamental analysis looks at the company rather than just the numbers or charts. This involves looking at the company's past performance and the credibility of its accounts. It is one of the traditional methods, relies on publicly available information about the company, and is used mainly by fund managers. Technical analysis does not consider the company's fundamentals; it mainly looks at trends in the stock's past performance as a time series. These are the two most common approaches to stock price prediction.

The current trend in price forecasting is the use of data mining technologies. Artificial Neural Networks (ANN), Machine Learning (ML) algorithms, and statistical models are now being used to help with prediction.

The amount of data generated by trades keeps increasing, and data mining and analysis at this scale can be very difficult with standard methods. Given the amount of data produced daily, this could be considered a Big Data analytics problem. Because the values change constantly throughout the day, it is difficult to monitor every available stock, let alone to predict price movements. This is where algorithms and models come into play. With these algorithmic models, predictions can be made with relative accuracy, giving investors better insight into what the data is saying without excessive manual review.

Goal:

The goal of this blog is to look at possible methods for prediction using ML, ANN, and statistical models. Statistical methods are currently the most commonly used for price prediction, but can ML, ANN, or hybrid systems give a more accurate prediction? By looking at statistical methods, pure ML, pure ANN, and hybrid models in turn, a more comprehensive list can be made, along with a better idea of which approach could be better for forecasting purposes.

Methodology Review:

A few emerging methods are gaining popularity and application over the traditional financial approaches. These methods are based on ML, statistical models, ANN, or a hybrid of them. Some of the proposed models are purely statistical, some use pure ML or ANN, and others take a hybrid approach that combines statistical methods with ML or ANN. Each model has its pros and cons and a type of problem that it solves best.

Statistical Methods:

Using data analysis, one can predict the closing price of a given stock. Several common data analysis methods are used to build a predictive model, and some of them are standard stock price indicators used today in the stock market and by fund managers. The indicators are Typical Price (TP), Chaikin Money Flow Indicator (CMI), Stochastic Momentum Index (SMI), Relative Strength Index (RSI), Bollinger Bands (BB), Moving Average (MA), and Bollinger Signal. The indicators must agree on the direction of movement for the price movement to be predicted most accurately. Combining them gives a more accurate prediction by looking at upper and lower bands: if the price goes above the upper band, that indicates a good selling point, and if it goes below the lower band, that indicates a good buying point. Combining the results of several models in this way gives a better chance of predicting a price change than any single statistical model alone (Kannan, Sekar, Sathik, & Arumugam, 2010).
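The band rule described above can be sketched in a few lines of Python. This is only a minimal illustration, not the procedure used by Kannan et al.: the 20-period window and the multiplier of 2 standard deviations are the conventional Bollinger Band defaults rather than values taken from the paper, and the function names and sample prices are made up for the example.

```python
from statistics import mean, pstdev

def typical_price(high, low, close):
    """Typical Price: the average of the period's high, low, and close."""
    return (high + low + close) / 3.0

def bollinger_bands(prices, window=20, k=2.0):
    """Return (lower, middle, upper) bands computed from the last `window` prices."""
    recent = prices[-window:]
    middle = mean(recent)        # simple moving average (the MA indicator)
    spread = k * pstdev(recent)  # k population standard deviations
    return middle - spread, middle, middle + spread

def band_signal(price, lower, upper):
    """Band rule from the text: above the upper band -> sell, below the lower band -> buy."""
    if price > upper:
        return "sell"
    if price < lower:
        return "buy"
    return "hold"

# Hypothetical closing prices, for illustration only.
closes = [100, 101, 99, 100, 102, 101, 100, 99, 101, 100]
lower, middle, upper = bollinger_bands(closes, window=10, k=2.0)
for price in (105, 95, 100.5):
    print(price, band_signal(price, lower, upper))
```

In a combined system, a signal like this would only be acted on when the other indicators point in the same direction.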

Machine Learning and Artificial Neural Network Methods:

Text mining has also been used to predict stock price trends, especially intraday price trends. With text mining techniques, there was a 46% chance of correctly knowing whether a stock would increase or decrease by 0.5% or more, or stay between those two bounds, which is a notable improvement over a random predictor that gave only around 33% accuracy. The process gathers press releases, preprocesses them into usable data, and categorizes them into different news types. Trading rules for particular stocks can then be derived from this data (Mittermayer, 2004).
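To make the three-way outcome concrete, here is a small sketch of how price moves could be labeled and how accuracy would be scored. It assumes the 0.5% threshold splits moves into "up", "down", and "steady"; the function names and sample labels are hypothetical and not taken from Mittermayer's system. With three labels, a uniform random guess is right about one time in three, which is where a baseline of roughly 33% comes from.

```python
def label_move(prev_price, next_price, threshold=0.005):
    """Classify a price move as 'up', 'down', or 'steady' using a +/-0.5% threshold."""
    change = (next_price - prev_price) / prev_price
    if change > threshold:
        return "up"
    if change < -threshold:
        return "down"
    return "steady"

def accuracy(predicted, actual):
    """Fraction of predictions that match the actual labels."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)

# Hypothetical predictions and outcomes, for illustration only.
actual = [label_move(100, 101), label_move(100, 99), label_move(100, 100.2)]
predicted = ["up", "steady", "steady"]
print(actual, accuracy(predicted, actual))
```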

Pure ANNs are currently used for stock prediction as well as analysis. Their use has given very reliable results, as ANNs tolerate errors in the data, can handle large and complex data sets, and can produce useful predictions. Forecasting even a single stock requires many interacting input series. Each neuron can represent a decision process, which allows an ANN to represent the interaction between the decisions of everyone in the market and so, in principle, to model a market as a whole. ANNs have been found to be very effective at predicting stock prices (Kimoto, Asakawa, Yoda, & Takeoka, 1990; Li & Ma, 2010).

ANNs are gaining acceptance in the financial sector, and many techniques and applications use AI to create prediction models. One common method is to use a genetic algorithm (GA) to aid in training the ANN for stock price prediction. In most cases, the GA is used for training the network, selecting the feature subset, and aiding in topology optimization. A GA can also help with feature discretization and with determining the connection weights of an ANN (Kim and Han, 2000).
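As a rough illustration of the feature-subset role, the sketch below evolves a 0/1 mask over candidate input features. It is a generic GA with truncation selection, one-point crossover, and bit-flip mutation, not the method from Kim and Han. The fitness function here is a toy stand-in; in a real setup it would presumably be something like the validation accuracy of an ANN trained on the selected features. All names and parameter values are assumptions made for the example.

```python
import random

def evolve_mask(fitness, n_features, pop_size=20, generations=30,
                mutation_rate=0.1, seed=0):
    """Search for a 0/1 feature mask that maximizes `fitness` with a simple GA."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_features)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[:pop_size // 2]    # the fitter half survives unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_features)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Flip each bit with a small probability (mutation).
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)

# Toy fitness, purely hypothetical: reward three "useful" features, penalize the rest.
USEFUL = {0, 2, 5}

def toy_fitness(mask):
    return sum(1 if i in USEFUL else -1 for i, bit in enumerate(mask) if bit)

best = evolve_mask(toy_fitness, n_features=8)
print(best, toy_fitness(best))
```

Because the fitter half of each generation is carried over unchanged, the best score found never gets worse from one generation to the next.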

Hybrid Models:

ML combined with an ANN brings together two very powerful methodologies. An ML model can be used for data mining to define and predict the relationships between financial and economic variables. Both level estimation and classification can be used to predict future values. Multiple studies show that a trading strategy guided by a classification model can generate higher risk-adjusted profits than the traditional buy-and-hold strategy, and higher than strategies guided by the level estimates of an ANN or linear regression (Enke and Thawornwong, 2005).

ML is mainly based on supervised learning, which is not well suited to long-term goals. Reinforcement learning is more suitable for modeling real-world situations such as stock price prediction. Treating stock price prediction as a Markov process, the TD(0) reinforcement learning algorithm, which focuses on learning from experience, is combined with an ANN that is taught the state of each stock price trend at given times. One issue is that accuracy decays drastically if the prediction period is either very short or very long, so this approach would only be useful for mid-range price prediction (Lee, 2001).

Another hybrid model that has given good accuracy (around 77%) combines a decision tree with an ANN. The decision tree creates the rules for the forecasting decision, and the ANN generates the prediction. This combination is more accurate than either an ANN or a decision tree alone (Tsai and Wang, 2009).
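For readers unfamiliar with TD(0), the reinforcement learning algorithm mentioned above, the core update is V(s) ← V(s) + α (r + γ V(s') − V(s)). The sketch below applies it with a plain lookup table. This is a simplification: Lee (2001) pairs TD(0) with an ANN as the value estimator, whereas a dictionary is swapped in here to keep the example short. The state names, rewards, and the values of the learning rate `alpha` and discount `gamma` are all hypothetical.

```python
def td0_update(values, state, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one TD(0) update: V(s) += alpha * (r + gamma * V(s') - V(s))."""
    td_error = reward + gamma * values[next_state] - values[state]
    values[state] += alpha * td_error
    return td_error

# Hypothetical trend states and rewards, for illustration only.
values = {"downtrend": 0.0, "flat": 0.0, "uptrend": 0.0}
episode = [
    ("flat", 1.0, "uptrend"),        # (state, reward, next state)
    ("uptrend", 0.5, "uptrend"),
    ("uptrend", -1.0, "downtrend"),
]
for state, reward, next_state in episode:
    td0_update(values, state, reward, next_state)
print(values)
```

Each update moves the estimate for one state toward the observed reward plus the discounted estimate of the next state, so the values are learned from experience one transition at a time.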

Other Useful Models:

Support Vector Machines (SVM) have also been used for stock market prediction. SVMs perform better than random guessing but are outperformed by hybrid models. A combination of a genetic algorithm and an SVM can produce a better result than an SVM alone, using a set of technical analysis indicators as input features. This method was used to produce a forecast not only for the stock being examined but also for any other stock that is correlated with the target stock. The hybrid can significantly outperform a standalone SVM (Choudhry and Garg, 2008).

Discussion:

There are many ways to predict a future value, though some are more accurate than others. Statistical analysis is the most common approach to prediction; linear regression, logistic regression, and time series models are some of the more common ways of predicting future values. However, these methods may not be the best for more complex and dynamic data sets. If the data is not all of the same type, linear regression may give poor results. This is where an ANN or ML model comes in. These can produce better results with higher accuracy than a purely statistical approach because they can work with the complex systems of a market. In pure form, an ANN or ML model can achieve better accuracy than many statistical methods for most stock price predictions. With a hybrid ANN, an even more accurate and useful model can be built: combining a decision tree (DT) or a GA with an ANN gives greater accuracy than either pure method.

References:

Choudhry, R., & Garg, K. (2008). A Hybrid Machine Learning System for Stock Market Forecasting. World Academy of Science, Engineering and Technology International Journal of Computer and Information Engineering, 2(3).

Enke, D., & Thawornwong, S. (2005). The use of data mining and neural networks for forecasting stock market returns. Expert Systems with Applications, 29(4), 927-940.

Kannan, K., Sekar, P., Sathik, M., & Arumugam, P. (2010). Financial Stock Market Forecast using Data Mining Techniques. Proceedings of the International MultiConference of Engineers and Computer Scientists, 1.

Kim, K., & Han, I. (2000). Genetic algorithms approach to feature discretization in artificial neural networks for the prediction of stock price index. Expert Systems with Applications, 19(2), 125-132.

Kimoto, T., Asakawa, K., Yoda, M., & Takeoka, M. (1990). Stock market prediction system with modular neural networks. 1990 IJCNN International Joint Conference on Neural Networks.

Lee, J. W. (2001). Stock price prediction using reinforcement learning. ISIE 2001. 2001 IEEE International Symposium on Industrial Electronics Proceedings (Cat. No.01TH8570).

Li, Y., & Ma, W. (2010). Applications of Artificial Neural Networks in Financial Economics: A Survey. 2010 International Symposium on Computational Intelligence and Design (ISCID), 211.

Lin, T. C. W. (2013). The New Investor. 60 UCLA Law Review 678; Temple University Legal Studies Research Paper No. 2013-45. Available at SSRN: https://ssrn.com/abstract=2227498

Mittermayer, M. (2004). Forecasting Intraday Stock Price Trends with Text Mining Techniques. Proceedings of the Hawai'i International Conference on System Sciences, January 5-8, 2004, Big Island, Hawaii.

Tsai, C., & Wang, S. (2009). Stock Price Forecasting by Hybrid Machine Learning Techniques. Proceedings of the International MultiConference of Engineers and Computer Scientists 2009 (IMECS 2009), Vol. 1.

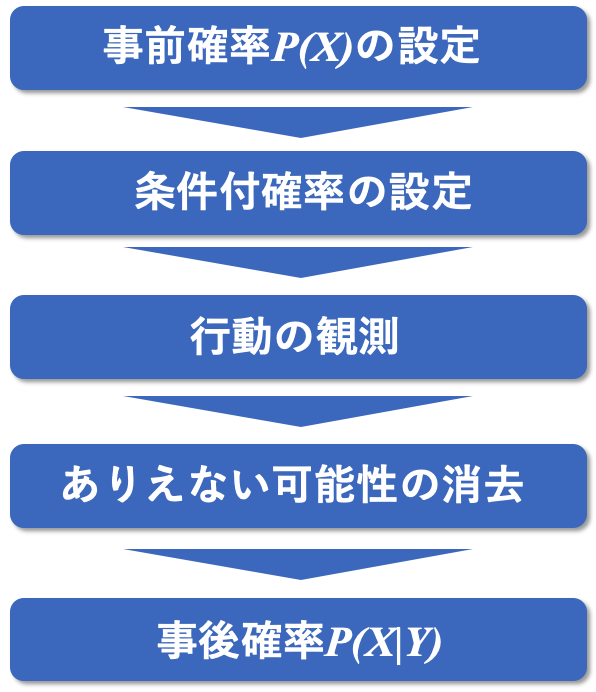
