By Patryk Antkiewicz, backend engineer at Unifa.

In one of Unifa's products we provide a feature called Letter, which, as the name suggests, is a message sent from a nursery school to the parents of all children in specific classes. We needed to implement search for these Letters, and since our database stores over 1 million of them, I decided to try one of the popular full-text search engines and see how it performs. OpenSearch seemed to be the best candidate since it integrates well with Ruby and AWS.

Running a local OpenSearch instance

I started by running an OpenSearch instance in my Docker environment, following the Docker instructions in the OpenSearch documentation: I added the following two services to my docker-compose.yml. The first one is the actual OpenSearch node; the other is OpenSearch Dashboards, which allows monitoring OpenSearch indexes in the browser (note that the admin password must be set in the OPEN_SEARCH_ADMIN_PASSWORD environment variable).

    opensearch-node1:
      image: opensearchproject/opensearch:latest
      container_name: opensearch-node1
      environment:
        - cluster.name=opensearch-cluster
        - node.name=opensearch-node1
        - discovery.seed_hosts=opensearch-node1
        - cluster.initial_cluster_manager_nodes=opensearch-node1
        - bootstrap.memory_lock=true
        - "OPENSEARCH_JAVA_OPTS=-Xms512m -Xmx512m"
        - OPENSEARCH_INITIAL_ADMIN_PASSWORD=${OPEN_SEARCH_ADMIN_PASSWORD}
      ulimits:
        memlock:
          soft: -1
          hard: -1
        nofile:
          soft: 65536
          hard: 65536
      volumes:
        - opensearch-data1:/usr/share/opensearch/data
      ports:
        - 9200:9200
        - 9600:9600
      networks:
        - opensearch-net
    opensearch-dashboards:
      image: opensearchproject/opensearch-dashboards:latest
      container_name: opensearch-dashboards
      ports:
        - 5601:5601
      expose:
        - "5601"
      environment:
        OPENSEARCH_HOSTS: '["https://opensearch-node1:9200"]'
      networks:
        - opensearch-net

A dedicated volume and a network for internal communication are also required:

    volumes:
      opensearch-data1:
    networks:
      opensearch-net:

Then I started the services:

    docker-compose up -d opensearch-node1 opensearch-dashboards

The Dashboard is available at http://localhost:5601/ - the login credentials are admin and the password provided in the OPEN_SEARCH_ADMIN_PASSWORD environment variable.

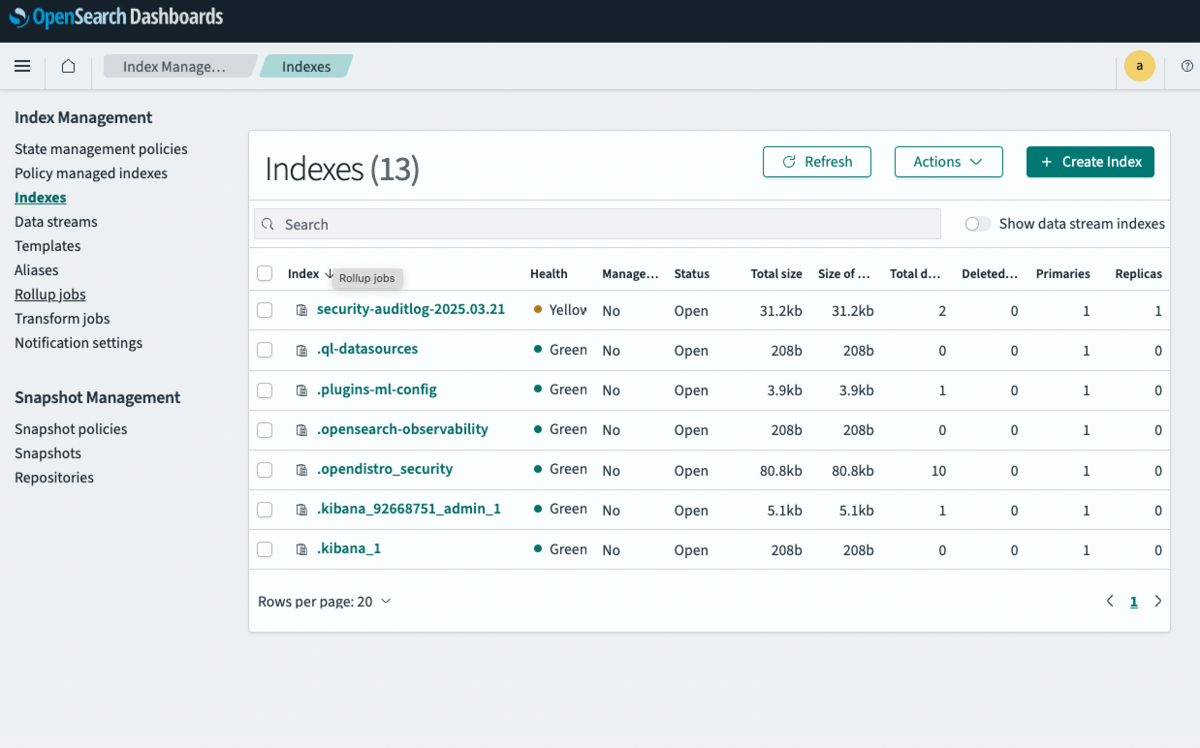

After logging in, I can see that some system indexes are already available.
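
Besides the Dashboard, the node can also be checked from a script. The following is a minimal sketch using only Ruby's standard library; it assumes the node is reachable from the host at https://localhost:9200 (the port published in docker-compose.yml) and queries the `_cluster/health` endpoint. The helper names `build_health_request` and `cluster_health` are made up for this example.

```ruby
require 'json'
require 'net/http'
require 'openssl'
require 'uri'

# Builds the URI and an authenticated GET request for the cluster health endpoint.
def build_health_request(base_url, user, password)
  uri = URI.join(base_url, '/_cluster/health')
  request = Net::HTTP::Get.new(uri)
  request.basic_auth(user, password)
  [uri, request]
end

# Sends the request and returns the parsed JSON body.
# Certificate verification is disabled here on the assumption that this is
# only ever pointed at a local development node.
def cluster_health(base_url, user, password)
  uri, request = build_health_request(base_url, user, password)
  http = Net::HTTP.new(uri.host, uri.port)
  http.use_ssl = (uri.scheme == 'https')
  http.verify_mode = OpenSSL::SSL::VERIFY_NONE
  JSON.parse(http.request(request).body)
end

# Example call (needs the container to be running):
# health = cluster_health('https://localhost:9200', 'admin', ENV.fetch('OPEN_SEARCH_ADMIN_PASSWORD'))
# puts health['status']
```

A `status` of green or yellow in the response would indicate that the single-node cluster is up and accepting requests.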

Indexing existing objects

Now it is time for the actual implementation. There is a dedicated Ruby gem for OpenSearch (https://github.com/opensearch-project/opensearch-ruby), so I started by adding it to my Gemfile:

    gem "opensearch-ruby"

The object I want to search is called Letter (stored in Postgres database) and has the following structure (for simplicity I skipped irrelevant columns here):

ID (PK, Integer)

organization_id (Integer)

title (String)

content (String)

scheduled_send_date_time (Datetime)

Search always happens in the context of a specific organization_id (nursery), and I want to perform full-text search on title and content. However, before an object becomes searchable it must first be indexed in OpenSearch, so this was my next task.

In the production database we have over 1 million such objects, so I created a DB dump and imported it into my local Postgres database after masking sensitive data. We use a gem called Global for managing credentials, URLs, etc., so I created an open_search.yml file with the following content. These settings are needed to connect to the OpenSearch instance from the app.

    default: &default
      host: <%= ENV.fetch('OPEN_SEARCH_HOST', 'https://host.docker.internal:9200') %>
      user: <%= ENV.fetch('OPEN_SEARCH_USER', 'admin') %>
      password: <%= ENV['OPEN_SEARCH_PASSWORD'] %>
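
To make it clearer how these values resolve, here is a small sketch that evaluates the same template with Ruby's standard `erb` and `yaml` libraries. It only illustrates the ERB-then-YAML idea and is not how Global is implemented internally; `OPEN_SEARCH_YML` and `render_open_search_config` are names invented for this example.

```ruby
require 'erb'
require 'yaml'

# Same content as open_search.yml, inlined so the sketch is self-contained.
OPEN_SEARCH_YML = <<~CONFIG
  default: &default
    host: <%= ENV.fetch('OPEN_SEARCH_HOST', 'https://host.docker.internal:9200') %>
    user: <%= ENV.fetch('OPEN_SEARCH_USER', 'admin') %>
    password: <%= ENV['OPEN_SEARCH_PASSWORD'] %>
CONFIG

# Evaluates the ERB tags first, then parses the resulting YAML
# and returns the settings under the "default" key.
def render_open_search_config(template)
  rendered = ERB.new(template).result
  YAML.safe_load(rendered, aliases: true).fetch('default')
end

config = render_open_search_config(OPEN_SEARCH_YML)
puts "host: #{config['host']}"
puts "user: #{config['user']}"
puts "password set? #{!config['password'].nil?}"
```

With none of the variables set, host and user fall back to the defaults passed to `ENV.fetch`, while the password stays empty, so it has to be supplied through OPEN_SEARCH_PASSWORD.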

Then I prepared a simple service that will index all Letters that exist in my database.

    require 'opensearch'

    class LetterSearchService
      def initialize
        @client = OpenSearch::Client.new(
          host: Global.open_search.host,
          user: Global.open_search.user,
          password: Global.open_search.password,
          # Certificate verification is disabled for the local instance.
          transport_options: { ssl: { verify: false } },
          log: true
        )
      end

      def index_letters
        indexed = 0
        create_index_if_not_exists

        ActiveRecord::Base.logger.silence do
          Letter.find_in_batches(batch_size: 1000) do |letters|
            batch_index_records = letters.map { |letter| letter_indexing_operation(letter) }
            @client.bulk(body: batch_index_records)
            # Count the real batch size; the last batch may hold fewer than 1000 records.
            indexed += letters.size
            puts "Indexed #{indexed}"
          end
        end
      end

      private

      def create_index_if_not_exists
        return if @client.indices.exists(index: 'letters')

        @client.indices.create(
          index: 'letters',
          body: {
            mappings: {
              properties: {
                id: { type: 'long' },
                organization_id: { type: 'keyword' },
                title: { type: 'text' },
                content: { type: 'text' },
                scheduled_send_date_time: { type: 'date' }
              }
            }
          }
        )
      end

      # Builds one "index" operation for the bulk request.
      def letter_indexing_operation(letter)
        {
          index: {
            _index: 'letters',
            _id: letter.id,
            data: {
              id: letter.id,
              organization_id: letter.organization_id,
              title: letter.title,
              content: letter.content,
              # &. avoids an error in case a letter has no scheduled date.
              scheduled_send_date_time: letter.scheduled_send_date_time&.strftime("%Y-%m-%dT%H:%M:%S")
            }
          }
        }
      end
    end

The logic is pretty straightforward: first I create the client, then I create an index called letters (if it doesn't exist). Finally I read all Letters from my database (in batches of 1000 records) and index them. OpenSearch::Client provides a method called bulk, which sends multiple operations in a single request. The scheduled_send_date_time attribute is not used for search, but I store it in the index because I want to show it in my results list.
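
One thing the service does not do is inspect the result of each bulk call. A bulk request can succeed as a whole while individual items are rejected, which is reported through the `errors` flag and the per-item `error` entries in the response. The helper below is a sketch of how such failures could be collected; it assumes the value returned by `bulk` can be read like a Hash, and `failed_bulk_items` and the sample data are made up for this example.

```ruby
# Returns the rejected items of a bulk response as
# [{ id:, status:, reason: }, ...]. An empty array means every item succeeded.
def failed_bulk_items(response)
  return [] unless response['errors']

  response.fetch('items', []).filter_map do |item|
    result = item.values.first # each item is keyed by its action, e.g. "index"
    next unless result['error']

    { id: result['_id'], status: result['status'], reason: result.dig('error', 'reason') }
  end
end

# Hand-written sample, shaped like a bulk API response (not real output).
SAMPLE_BULK_RESPONSE = {
  'took' => 12,
  'errors' => true,
  'items' => [
    { 'index' => { '_index' => 'letters', '_id' => '1', 'status' => 201 } },
    { 'index' => { '_index' => 'letters', '_id' => '2', 'status' => 400,
                   'error' => { 'type' => 'mapper_parsing_exception',
                                'reason' => 'failed to parse field [scheduled_send_date_time]' } } }
  ]
}.freeze

failed_bulk_items(SAMPLE_BULK_RESPONSE).each do |failure|
  warn "Letter #{failure[:id]} was not indexed (#{failure[:status]}): #{failure[:reason]}"
end
```

Inside `index_letters`, the same helper could be applied to the return value of `@client.bulk` so that rejected records are logged instead of silently skipped.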

To start the indexing I executed the command below in my project's Docker container (it took about 10 minutes to index 1 million records):

    LetterSearchService.new.index_letters

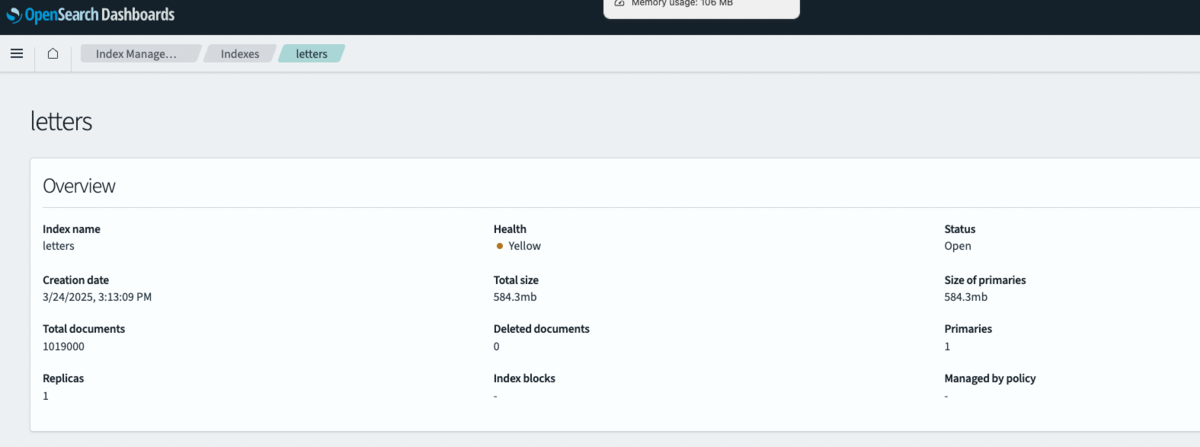

I can confirm in the Dashboard that the data has been properly indexed: the index holds over 1 million records and its total size is almost 600 MB.

This is the initial indexing of the existing dataset, but in a real-life scenario indexing should also be triggered whenever a new record is created. This can be achieved very simply by adding a method like the one below to LetterSearchService:

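    def index_letter(letter)
      @client.index(
        index: 'letters',
        id: letter.id,
        body: {
          id: letter.id,
          organization_id: letter.organization_id,
          title: letter.title,
          content: letter.content,
          # Included so that new documents carry the same fields as the bulk-indexed ones.
          scheduled_send_date_time: letter.scheduled_send_date_time&.strftime("%Y-%m-%dT%H:%M:%S")
        }
      )
    end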

and calling it after the record has been successfully persisted:

    letter = Letter.create!(letter_params)
    LetterSearchService.new.index_letter(letter)
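
Since the record is already saved at this point, it may be preferable that a temporary OpenSearch outage does not turn into an error for the user. The following sketch shows one possible way to do that by logging the failure instead of raising it. This is a design suggestion rather than part of the original implementation; `index_letter_safely`, `DemoLetter` and the two fake clients are hypothetical stand-ins so the example runs without Rails or a real cluster.

```ruby
require 'logger'

# Indexes one letter and returns true, or logs the error and returns false.
# `client` is anything that responds to `index`, such as an OpenSearch::Client.
def index_letter_safely(client, letter, logger: Logger.new($stderr))
  client.index(
    index: 'letters',
    id: letter.id,
    body: {
      id: letter.id,
      organization_id: letter.organization_id,
      title: letter.title,
      content: letter.content
    }
  )
  true
rescue StandardError => e
  logger.error("Indexing letter #{letter.id} failed: #{e.message}")
  false
end

# Stand-in for the ActiveRecord model (demo only).
DemoLetter = Struct.new(:id, :organization_id, :title, :content, keyword_init: true)

# Fake client that records what it was asked to index (demo only).
class RecordingClient
  attr_reader :calls

  def initialize
    @calls = []
  end

  def index(**options)
    @calls << options
  end
end

# Fake client that simulates an unreachable cluster (demo only).
class UnavailableClient
  def index(**_options)
    raise IOError, 'connection refused'
  end
end

letter = DemoLetter.new(id: 1, organization_id: 1, title: 'Hello', content: 'World')
puts index_letter_safely(RecordingClient.new, letter)
puts index_letter_safely(UnavailableClient.new, letter)
```

A letter that failed to index this way would still need to be picked up later, for example by re-running the bulk indexing.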

Implementing the search feature

Now we can finally proceed to the actual search implementation. I added the methods below to my LetterSearchService:

    def search_letters(organization_id, text)
      response = @client.search(
        body: letter_search_query(organization_id, text),
        index: 'letters'
      )
      # Return only the stored documents, without the search metadata.
      response.dig('hits', 'hits').pluck('_source')
    rescue StandardError => e
      Rails.logger.error "Search failed: #{e.message}"
      []
    end

    private

    def letter_search_query(organization_id, text)
      {
        size: 10,
        query: {
          bool: {
            must: [
              {
                # Full-text match against both text fields.
                multi_match: {
                  query: text,
                  fields: ['title', 'content'],
                },
              },
              {
                # Exact match on the organization.
                term: {
                  organization_id: {
                    value: organization_id,
                  },
                },
              },
            ],
          },
        },
      }
    end

It uses the search method of the OpenSearch client, which expects an index name and a query written in the OpenSearch query DSL (the syntax is explained in the OpenSearch documentation). In my case I used term (exact match) to restrict letters to a specific organization and multi_match for full-text queries targeting two fields: title and content.
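
As a possible variation, the organization condition could be moved from `must` into the `filter` clause of the bool query. Clauses in filter context restrict the result set without contributing to the relevance score, which fits an exact-match condition like this one. The sketch below only builds and prints the query hash; `letter_search_query_with_filter` is a name made up for this example and is not part of the service above.

```ruby
require 'json'

# Builds a query where full-text matching is scored (must)
# and the organization restriction is not (filter).
def letter_search_query_with_filter(organization_id, text, size: 10)
  {
    size: size,
    query: {
      bool: {
        must: [
          { multi_match: { query: text, fields: %w[title content] } }
        ],
        filter: [
          { term: { organization_id: organization_id } }
        ]
      }
    }
  }
end

puts JSON.pretty_generate(letter_search_query_with_filter(1, 'プール'))
```

The hash can be passed as `body:` to `@client.search` in the same way as the original query.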

The result is returned as JSON, and the actual data is available under response["hits"]["hits"]. Here is an example:

    {
      "took": 35,
      "timed_out": false,
      "_shards": {
        "total": 1,
        "successful": 1,
        "skipped": 0,
        "failed": 0
      },
      "hits": {
        "total": {
          "value": 110,
          "relation": "eq"
        },
        "max_score": 12.256855,
        "hits": [
          {
            "_index": "letters",
            "_id": "304850",
            "_score": 12.256855,
            "_source": {
              "id": 304850,
              "organization_id": 1,
              "title": "プールについて",
              "content": "★★★★★★★プール★★★★★★🏊 プール★★★★★★★★★★! ★★★★プール★★★★、プール★★★★★★★★★★★★★プール★★★★★★★★★★★★★★★★",
              "scheduled_send_date_time": "2023-06-29T17:00:00"
            }
          },
          {
            "_index": "letters",
            "_id": "940971",
            "_score": 12.05091,
            "_source": {
              "id": 940971,
              "organization_id": 1,
              "title": "本日の伝達について",
              "content": "★★★★★★★★★プール★★★★★★★★★★★! プール★★★★★★★★★★🏊 ★★★★★★★プール★★★★★★★★★★★★★★☺️ ★★★★★★★★プール★★★★★★★★★★★★★★★★★★★★★★",
              "scheduled_send_date_time": "2024-07-22T15:30:00"
            }
          }
        ]
      }
    }
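
To show how this structure can be navigated without Rails helpers such as `pluck`, here is a small plain-Ruby sketch that reads the total count and a few fields from each hit. The sample is a shortened copy of the response above, and `SearchHit`, `extract_hits` and `total_hits` are names invented for this example.

```ruby
require 'json'

SearchHit = Struct.new(:id, :title, :score, keyword_init: true)

# Maps each entry under hits.hits to a small value object.
def extract_hits(response)
  hits = response.dig('hits', 'hits') || []
  hits.map do |hit|
    SearchHit.new(
      id: hit.dig('_source', 'id'),
      title: hit.dig('_source', 'title'),
      score: hit['_score']
    )
  end
end

# Total number of matching documents, which can exceed the number of returned hits.
def total_hits(response)
  response.dig('hits', 'total', 'value').to_i
end

SAMPLE_SEARCH_RESPONSE = JSON.parse(<<~SAMPLE)
  {
    "hits": {
      "total": { "value": 110, "relation": "eq" },
      "hits": [
        { "_id": "304850", "_score": 12.256855,
          "_source": { "id": 304850, "title": "プールについて" } },
        { "_id": "940971", "_score": 12.05091,
          "_source": { "id": 940971, "title": "本日の伝達について" } }
      ]
    }
  }
SAMPLE

puts "Total matches: #{total_hits(SAMPLE_SEARCH_RESPONSE)}"
extract_hits(SAMPLE_SEARCH_RESPONSE).each do |hit|
  puts "#{hit.id} #{hit.title} (score #{hit.score})"
end
```

Note that `hits.total.value` (110 here) counts all matches, while the `hits.hits` array only contains as many documents as the `size` of the query allows.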

Now I want to call the search from a simple HTML form, show the results dynamically, and run a new search whenever another character is typed.

For this purpose I created a simple Rails controller that calls the client and returns the results:

    class Letter::SearchesController < ApplicationController
      def index
        if params[:text].present?
          results = LetterSearchService.new.search_letters(
            params[:organization_id].to_i,
            params[:text]
          )
          render json: { results: results }
        else
          render json: { results: [] }
        end
      end
    end
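
The controller accepts any non-empty text, while the JavaScript in this article only starts searching once three characters have been typed. If the same rule should also hold on the server side, a small normalization step could be added before calling the service. This is a sketch under that assumption; `normalize_search_text` and both constants are hypothetical, and the 100-character cap is an arbitrary value chosen for illustration.

```ruby
MIN_QUERY_LENGTH = 3   # same threshold as the front-end check
MAX_QUERY_LENGTH = 100 # arbitrary cap chosen for this sketch

# Returns the trimmed query, or nil when it is too short to be worth searching.
def normalize_search_text(raw)
  text = raw.to_s.strip
  return nil if text.length < MIN_QUERY_LENGTH

  text[0, MAX_QUERY_LENGTH]
end

['  プール ', 'ab', nil].each do |input|
  puts "#{input.inspect} -> #{normalize_search_text(input).inspect}"
end
```

In the controller, a `nil` result could simply take the same branch that currently renders an empty result list.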

And an HTML form (written in HAML) that calls the above API:

    .component.mb-5{ data: { component: 'letter-search-form' } }
      %ul.nav
        %li.nav-item
          = form_with url: letter_searches_path, method: :get, id: "search-form", local: false do |f|
            = f.hidden_field :organization_id, value: organization_id, data: { role: 'organization-id' }
            = f.text_field :query, placeholder: "Search...", data: { role: 'query-input' }
      #results-container
        .d-none
          = render 'letter/handlebars/search_results'
        %div{ data: { placeholder: 'search-results-render'} }

JavaScript handles the API response and transforms it into a result list using a Handlebars template:

    onmount('[data-component=letter-search-form]', function () {
      const organizationId = this.querySelector('[data-role=organization-id]').value
      const queryInput = this.querySelector('[data-role=query-input]')
      const resultsPlaceholder = this.querySelector('[data-placeholder=search-results-render]')
      const resultTemplateString = this.querySelector("[data-template=letter-search-result]").innerHTML;
      const resultTemplate = Handlebars.compile(resultTemplateString)

      queryInput.addEventListener('keyup', function() {
        const query = queryInput.value;
        if (query.length > 2) { // Minimum 3 characters to start search
          fetchResults(query);
        } else {
          resultsPlaceholder.innerHTML = ''; // Clear results if query is too short
        }
      });

      function fetchResults(query) {
        fetch(`/letter/searches?text=${encodeURIComponent(query)}&organization_id=${organizationId}`)
          .then(response => response.json())
          .then(data => {
            displayResults(data.results);
          });
      }

      function displayResults(results) {
        resultsPlaceholder.innerHTML = resultTemplate({ results: results })
      }
    })

Handlebars template:

    %script{ type: 'text/x-handlebars-template', data: { template: 'letter-search-result' } }
      :plain
        <ul class="list-group search-results-list">
          {{#each results as |result|}}
            <li class="list-group-item d-flex justify-content-between align-items-center">
              <div class="result-text">
                <span class="font-weight-bold result-title">{{result.title}}</span>
                <span class="text-muted result-content">{{truncate result.content 100}}</span>
                <div class="text-muted mt-2">
                  <small>{{formatDate result.scheduled_send_date_time}}</small>
                </div>
              </div>
              <a href="/letters/{{result.id}}" class="btn btn-sm btn-outline-primary details-link">詳細を見る</a>
            </li>
          {{/each}}
        </ul>

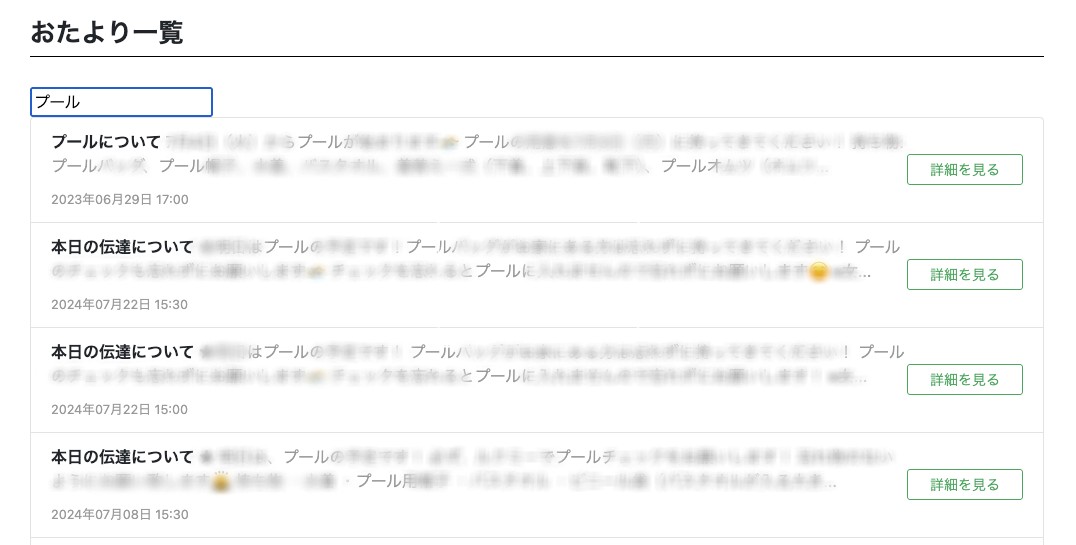

The end result is shown above: after entering at least 3 characters, the list of results matching the query appears immediately. The results are relevant: the phrase プール is present in all of the records shown.

This is just a simple, experimental implementation without advanced logic or search conditions, but it showed that OpenSearch can be integrated into a Ruby on Rails app with very little effort, even for a large dataset.

In the experiment above I used a local OpenSearch instance, but OpenSearch is also available as a managed AWS service, so it can be set up relatively easily - detailed pricing is listed on the AWS website.
