By Vyacheslav Vorona, iOS engineer at UniFa.

Recently the software development community has been abuzz with excitement over the potential of ChatGPT. As a powerful language model trained on vast amounts of text data, ChatGPT has the ability to understand and generate human-like language with astonishing accuracy. This has led many developers to wonder how they can leverage this technology in their own development processes and to seek out ways to integrate ChatGPT into their workflows.

I was no exception and explored ChatGPT's abilities in various ways, from solving LeetCode problems to writing small projects and code documentation with its help. Like many other developers, I quickly stumbled upon some of its limitations: whenever the context gets too complex, it starts making mistakes, often too serious for ChatGPT to be safely used for production code.

However, there is a particular area of our job where I think ChatGPT can be quite useful even at its current stage: automated testing. Even if a mistake in a unit test slips through code review, it will not turn into a bug in the actual product and affect the user base.

Also, in the case of unit testing, we can use the concepts of Protocol-Oriented Programming and Dependency Injection to simplify the context (i.e., the entity under test) and make it easier for ChatGPT to understand what we want from it.

Let's take a look at how we could achieve that. Spoiler: the result is not ideal, but it is still quite promising.

Core Concepts

First things first, let's talk a little about Protocol-Oriented Programming and Dependency Injection. Both have been around for years, and you have probably heard of them, but it is better to be clear, right?

- Protocol-Oriented Programming is a programming paradigm in which we start coding by defining a set of protocols that act as API contracts between the entities in our program. Each protocol describes the properties and functionality an entity needs to implement in order to conform to it. When the entities are implemented as actual classes, structures, etc., they are "masked" from each other behind the protocols, so the actual implementation details are abstracted away.

The important part here is that we can hide the implementation details of surrounding entities behind protocols and thus simplify the context for ChatGPT.
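To make the idea concrete, here is a minimal sketch. The RobotNamer protocol and both conforming types are hypothetical and not part of the project below. The caller is written only against the protocol, so it cannot tell which implementation it received.

```swift
// Hypothetical protocol acting as an API contract (not part of the article's project).
protocol RobotNamer {
    func makeName(head: String, body: String) -> String
}

// One implementation: joins the parts with a dash.
struct DashNamer: RobotNamer {
    func makeName(head: String, body: String) -> String {
        "\(head)-\(body)"
    }
}

// Another implementation with different internals.
struct UppercaseNamer: RobotNamer {
    func makeName(head: String, body: String) -> String {
        "\(head)\(body)".uppercased()
    }
}

// The caller only knows the protocol, so either implementation can be passed in.
func describeRobot(using namer: RobotNamer) -> String {
    "Robot: " + namer.makeName(head: "Head1", body: "Body1")
}

print(describeRobot(using: DashNamer()))      // Robot: Head1-Body1
print(describeRobot(using: UppercaseNamer())) // Robot: HEAD1BODY1
```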

- Dependency Injection is a fancy way of saying that an object's implementation should not "silently" depend on some entity from outside. Instead, we should explicitly pass (or "inject") that entity into the object during its setup. In combination with Swift protocols, this allows us to easily stub out dependencies and replace them with simplified versions (mocks) that conform to the same protocols.

By injecting dependencies we can eliminate a lot of details that we would otherwise need to provide to ChatGPT in order for it to understand what is going on.
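A minimal sketch of the difference follows. All names here (HeadSource, HiddenDependencyCounter, InjectedCounter, MockHeadSource) are hypothetical. The first counter silently creates its own dependency. The second receives it through its initializer, so a test can hand it a simplified mock conforming to the same protocol.

```swift
// Hypothetical dependency contract.
protocol HeadSource {
    func headNames() -> [String]
}

// A "real" implementation; imagine it reads from a database or the network.
struct FactoryHeadSource: HeadSource {
    func headNames() -> [String] { ["Alpha", "Beta", "Gamma"] }
}

// Hidden dependency: the counter creates FactoryHeadSource itself,
// so a test has no way to replace it.
struct HiddenDependencyCounter {
    func count() -> Int { FactoryHeadSource().headNames().count }
}

// Injected dependency: whoever creates the counter decides what it talks to.
struct InjectedCounter {
    private let source: HeadSource

    init(source: HeadSource) {
        self.source = source
    }

    func count() -> Int { source.headNames().count }
}

// A simplified stand-in (mock) conforming to the same protocol.
struct MockHeadSource: HeadSource {
    let names: [String]
    func headNames() -> [String] { names }
}

let production = InjectedCounter(source: FactoryHeadSource())
let underTest = InjectedCounter(source: MockHeadSource(names: ["Head1"]))
print(production.count()) // 3
print(underTest.count())  // 1
```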

Assembling Robots

Before asking ChatGPT to write test code for us, we need to do some work ourselves and implement a testable object, keeping in mind the concepts defined in the previous section.

Let's say we need a service that assembles robots 🤖

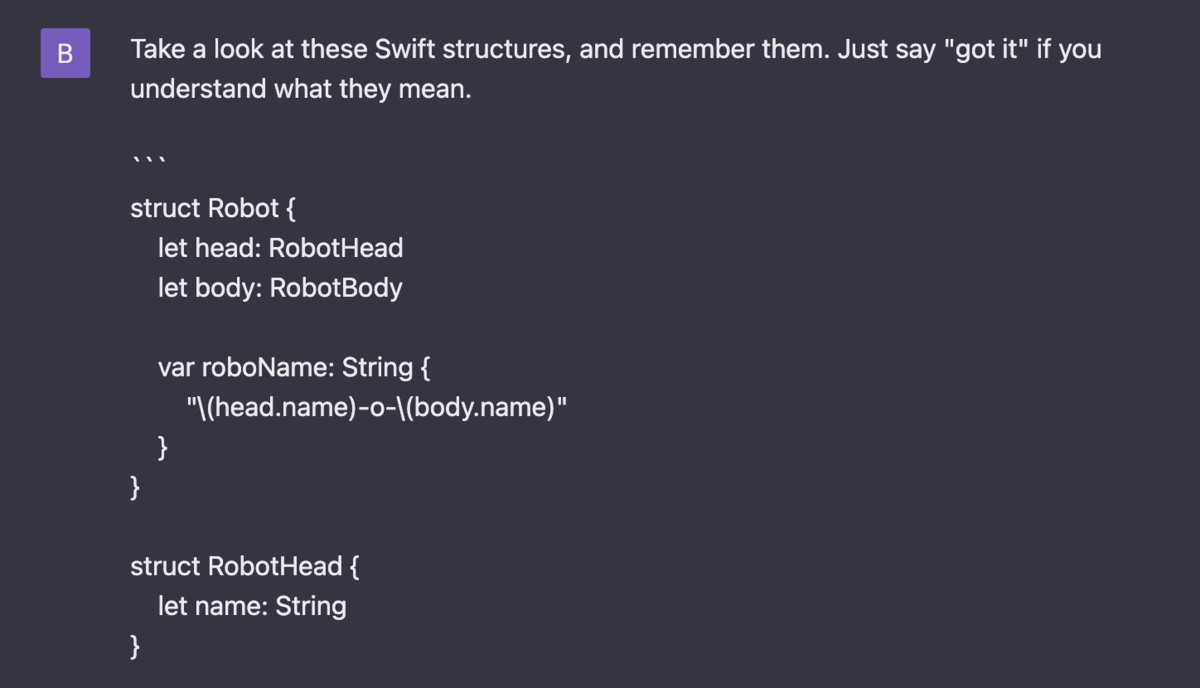

Each robot needs a head and a body. These are the basic entities our service is going to operate on.

```swift
struct Robot {
    let head: RobotHead
    let body: RobotBody

    var roboName: String {
        "\(head.name)-\(body.name)"
    }
}

struct RobotHead {
    let name: String
}

struct RobotBody {
    let name: String
}
```
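A robot's name is simply its head and body names joined by a dash, and that is the value the tests compare later. The quick check below uses made-up part names. It also adds an optional Equatable conformance that is not in the original code, so the compiler synthesizes == from the stored properties.

```swift
struct Robot {
    let head: RobotHead
    let body: RobotBody

    var roboName: String {
        "\(head.name)-\(body.name)"
    }
}

struct RobotHead {
    let name: String
}

struct RobotBody {
    let name: String
}

// Optional addition (not in the original code): declaring Equatable in the same
// file lets the compiler synthesize == from the stored properties.
extension RobotHead: Equatable {}
extension RobotBody: Equatable {}
extension Robot: Equatable {}

// Example values are made up for illustration.
let robot = Robot(head: RobotHead(name: "Head1"), body: RobotBody(name: "Body1"))
print(robot.roboName) // Head1-Body1
print(robot == Robot(head: RobotHead(name: "Head1"), body: RobotBody(name: "Body1"))) // true
```

The original Robot does not conform to Equatable, so the tests later in this article compare roboName arrays instead of the robots themselves. With a conformance like this one, XCTAssertEqual could compare [Robot] values directly.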

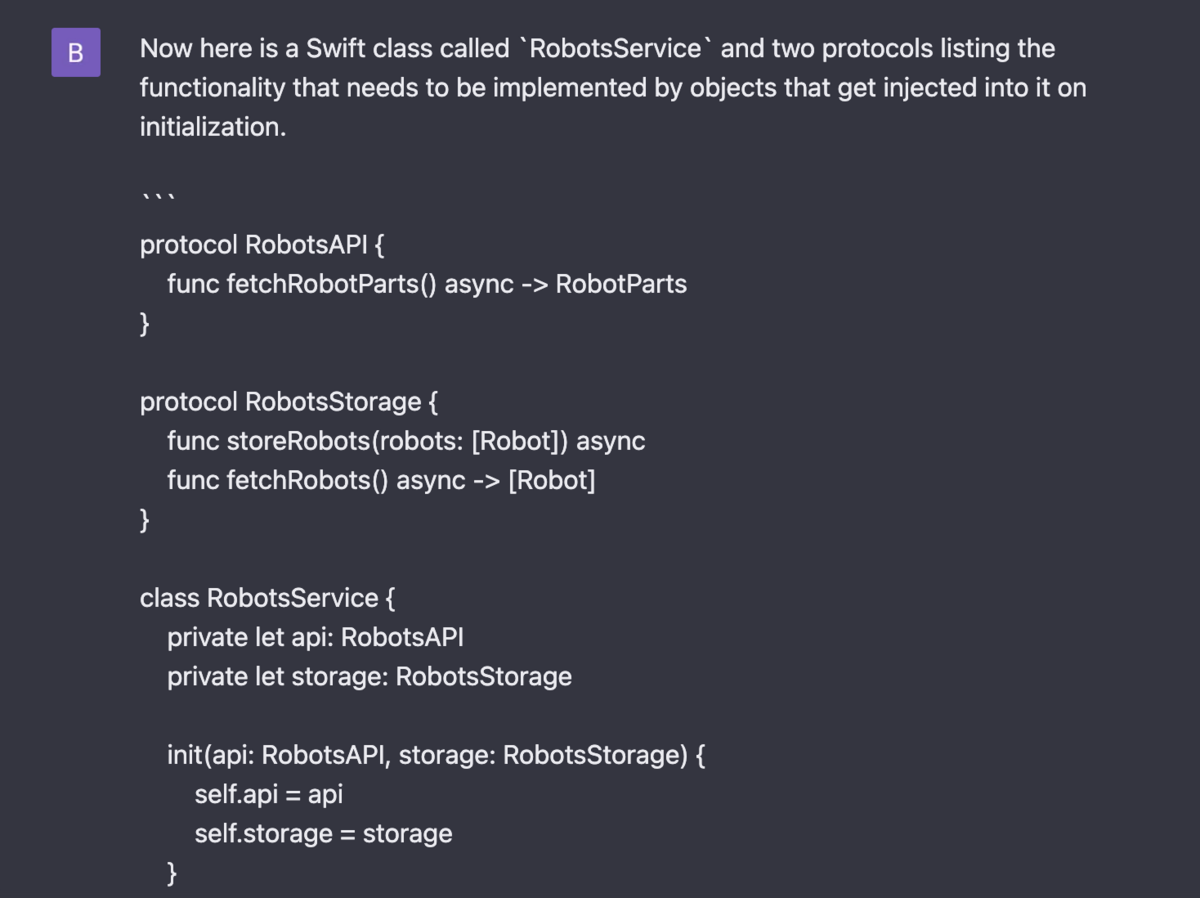

The parts are provided by some API that we will hide from our assembly service behind a protocol:

```swift
protocol RobotsAPI {
    func fetchRobotParts() async -> RobotParts
}

// This is just a container with robot parts
struct RobotParts {
    let heads: [RobotHead]
    let bodies: [RobotBody]
}
```

In a real project, a type implementing the RobotsAPI protocol could call a remote API and perform some complex operations under the hood. But the protocol basically says "There has to be an asynchronous method called fetchRobotParts that returns RobotParts, that's it". This means that ChatGPT can abstract away from such details and not care about them either.
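To illustrate, here is a sketch of one hypothetical conforming type, SimulatedRemoteRobotsAPI, which is not part of the original project. It pretends to be a slow remote service by sleeping briefly with Task.sleep(nanoseconds:) before answering. From the caller's side, it is still just an awaited fetchRobotParts() call.

```swift
struct RobotHead { let name: String }
struct RobotBody { let name: String }

struct RobotParts {
    let heads: [RobotHead]
    let bodies: [RobotBody]
}

protocol RobotsAPI {
    func fetchRobotParts() async -> RobotParts
}

// Hypothetical conformer standing in for a real network client.
struct SimulatedRemoteRobotsAPI: RobotsAPI {
    let delayNanoseconds: UInt64

    func fetchRobotParts() async -> RobotParts {
        // Simulate network latency; sleep throws only on cancellation, which we ignore here.
        try? await Task.sleep(nanoseconds: delayNanoseconds)
        // A real client would decode a server response instead of returning constants.
        return RobotParts(
            heads: [RobotHead(name: "Head1"), RobotHead(name: "Head2")],
            bodies: [RobotBody(name: "Body1")]
        )
    }
}

// The caller depends only on the protocol and cannot tell how the parts were obtained.
func countHeads(from api: RobotsAPI) async -> Int {
    await api.fetchRobotParts().heads.count
}

let headCount = await countHeads(from: SimulatedRemoteRobotsAPI(delayNanoseconds: 10_000_000))
print(headCount) // 2
```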

We can also store assembled robots in some storage locally until the moment we need them. The actual implementation of such storage, again, will not be visible from the perspective of the assembly service. The service will only know that it can store assembled robots and then fetch them later.

```swift
protocol RobotsStorage {
    func storeRobots(robots: [Robot]) async
    func fetchRobots() async -> [Robot]
}
```

Again, there could be a database or any other local storage conforming to the protocol, but we don't even have anything like that implemented in our project, only the protocol.
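For comparison, here is a minimal sketch of what a real conformer might look like, assuming an in-memory cache is enough. InMemoryRobotsStorage is hypothetical. Declaring it as an actor is one reasonable choice. The protocol's methods are already async, so the actor's isolated methods can satisfy them, and the actor serializes access to the stored array.

```swift
struct RobotHead { let name: String }
struct RobotBody { let name: String }

struct Robot {
    let head: RobotHead
    let body: RobotBody
    var roboName: String { "\(head.name)-\(body.name)" }
}

protocol RobotsStorage {
    func storeRobots(robots: [Robot]) async
    func fetchRobots() async -> [Robot]
}

// Hypothetical in-memory implementation. As an actor, it protects `robots`
// from concurrent mutation; its synchronous methods satisfy the async
// protocol requirements because callers outside the actor must `await` them.
actor InMemoryRobotsStorage: RobotsStorage {
    private var robots: [Robot] = []

    func storeRobots(robots: [Robot]) {
        self.robots = robots
    }

    func fetchRobots() -> [Robot] {
        robots
    }
}

let storage: RobotsStorage = InMemoryRobotsStorage()
await storage.storeRobots(robots: [Robot(head: RobotHead(name: "Head1"), body: RobotBody(name: "Body1"))])
let stored = await storage.fetchRobots()
print(stored.map { $0.roboName }) // ["Head1-Body1"]
```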

Finally, we need to implement the actual service that gets dependency-injected with the API and the Storage, assembles the robots and returns them. Here it is:

```swift
class RobotsService {
    private let api: RobotsAPI
    private let storage: RobotsStorage

    // 1
    init(api: RobotsAPI, storage: RobotsStorage) {
        self.api = api
        self.storage = storage
    }

    // 2
    func getRobots() async -> [Robot] {
        // 3
        let storedRobots = await storage.fetchRobots()
        guard storedRobots.isEmpty else { return storedRobots }

        // 4
        let robotParts = await api.fetchRobotParts()

        // 5
        let robots = assembleRobots(from: robotParts)

        // 6
        await storage.storeRobots(robots: robots)
        return await storage.fetchRobots()
    }

    // 7
    private func assembleRobots(from parts: RobotParts) -> [Robot] {
        var index = 0
        var robots: [Robot] = []
        while index < parts.heads.count, index < parts.bodies.count {
            robots.append(.init(
                head: parts.heads[index],
                body: parts.bodies[index]
            ))
            index += 1
        }
        return robots
    }
}
```

Let's take a closer look at what is going on here and what we will ask ChatGPT to auto-test for us.

1. RobotsService gets injected with objects implementing the RobotsAPI and RobotsStorage protocols on initialization.

2. The method returning robots is publicly exposed, and this is what we are going to auto-test.

3. Here we check the storage, and if there are robots in it, they are returned immediately.

4. Otherwise, we call the API to get some robot parts.

5. We then assemble robots from the parts we got.

6. We store the robots and return them (re-reading them from the storage).

7. The assembly method just iterates through heads and bodies and assembles as many robots as it can.
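The loop in step 7 pairs heads and bodies by index and stops at the end of the shorter array. The standard library's zip(_:_:) expresses the same behavior, because it also stops at the shorter sequence. Below is a sketch of this alternative (assembleRobotsWithZip is our own hypothetical name, not part of the original service), checked against the original loop on mismatched input.

```swift
struct RobotHead { let name: String }
struct RobotBody { let name: String }

struct Robot {
    let head: RobotHead
    let body: RobotBody
    var roboName: String { "\(head.name)-\(body.name)" }
}

struct RobotParts {
    let heads: [RobotHead]
    let bodies: [RobotBody]
}

// The original index-based loop from RobotsService.
func assembleRobots(from parts: RobotParts) -> [Robot] {
    var index = 0
    var robots: [Robot] = []
    while index < parts.heads.count, index < parts.bodies.count {
        robots.append(.init(head: parts.heads[index], body: parts.bodies[index]))
        index += 1
    }
    return robots
}

// Hypothetical alternative: zip pairs elements and stops at the shorter array.
func assembleRobotsWithZip(from parts: RobotParts) -> [Robot] {
    zip(parts.heads, parts.bodies).map { head, body in Robot(head: head, body: body) }
}

// Three heads but only two bodies: only two robots can be assembled.
let parts = RobotParts(
    heads: [RobotHead(name: "H1"), RobotHead(name: "H2"), RobotHead(name: "H3")],
    bodies: [RobotBody(name: "B1"), RobotBody(name: "B2")]
)
print(assembleRobots(from: parts).map { $0.roboName })        // ["H1-B1", "H2-B2"]
print(assembleRobotsWithZip(from: parts).map { $0.roboName }) // ["H1-B1", "H2-B2"]
```

A mismatched-input case like this is also a natural candidate for an additional unit test of the service.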

Explaining the Context to ChatGPT

Now it is time to talk to our young AI partner and ask it to write some auto-tests.

To do that, I first "feed" ChatGPT all the structures we defined at the very beginning: Robot, RobotHead, RobotBody, and RobotParts (the screenshot is cropped).

Then I let it take a look at the RobotsService.

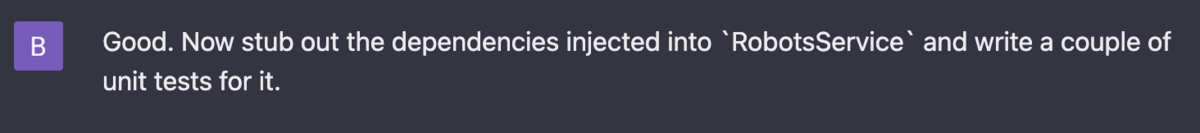

Now that ChatGPT knows what it is dealing with, let's lean back and ask it to write some tests for us.

"Beep-boop, I will take your job" (no)

"You can watch three things endlessly: fire burning, water flowing, and other people working." - Old Russian saying

The beginning is promising. Looking at the protocols we provided, ChatGPT writes two mocks that it intends to use in the tests. It manages to abstract away from potentially complex real-life implementations of those protocols and implements really simple objects that conform to the same protocols.

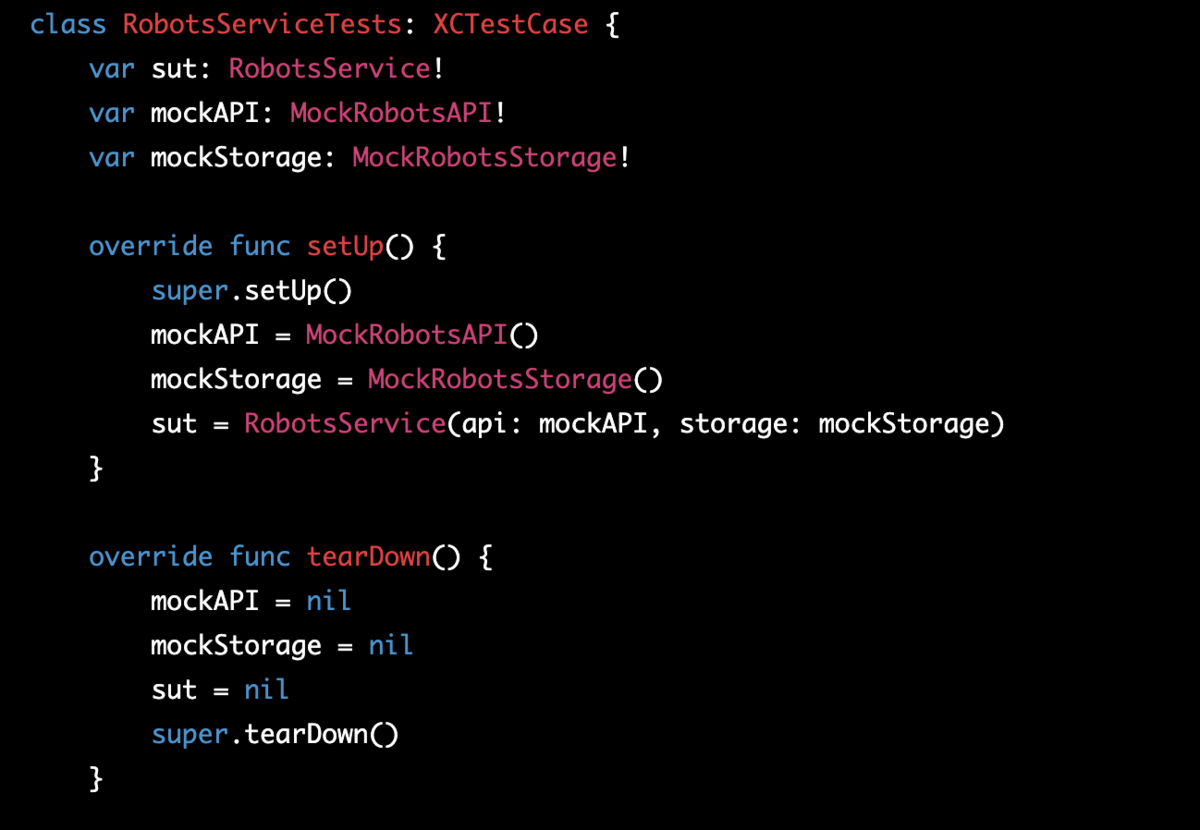

The test setup looks nice too. ChatGPT properly sets up and resets the state of the test class around each test case and follows common naming conventions. At the end of the setup process, it creates an instance of RobotsService and injects the previously created mocks into it. Nice.

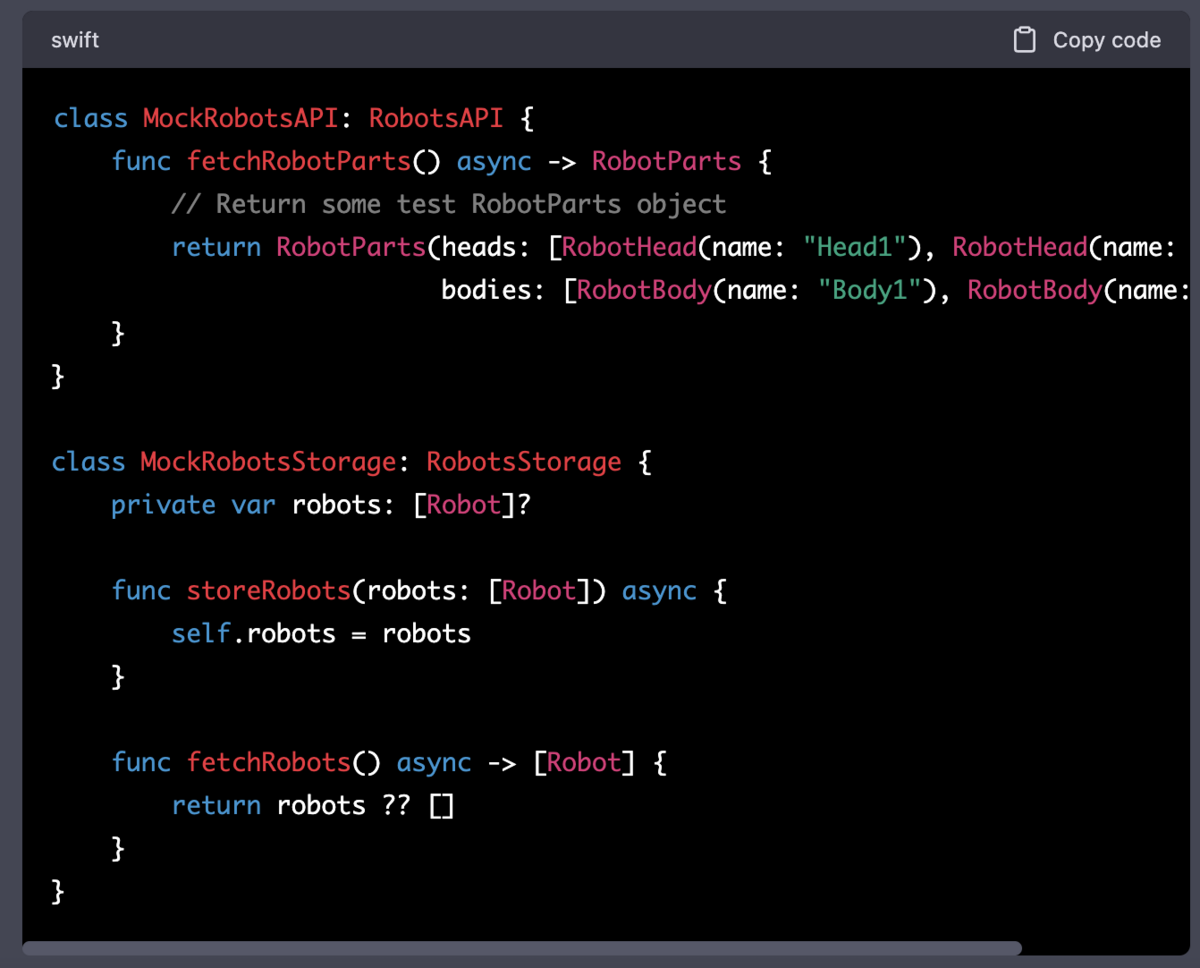

However, when ChatGPT gets to writing the actual tests, some problems start to appear. It made similar mistakes in all the tests it provided, so we will look at just one example here.

```swift
func testGetRobots_withExistingRobotsInStorage_returnsStoredRobots() async throws {
    // Arrange
    let expectedRobots = [Robot(head: RobotHead(name: "Head1"), body: RobotBody(name: "Body1")),
                          Robot(head: RobotHead(name: "Head2"), body: RobotBody(name: "Body2"))]
    mockStorage.storeRobots(robots: expectedRobots) // 1

    // Act
    let actualRobots = try await sut.getRobots() // 2

    // Assert
    XCTAssertEqual(actualRobots.map { $0.roboName }, expectedRobots.map { $0.roboName })
}
```

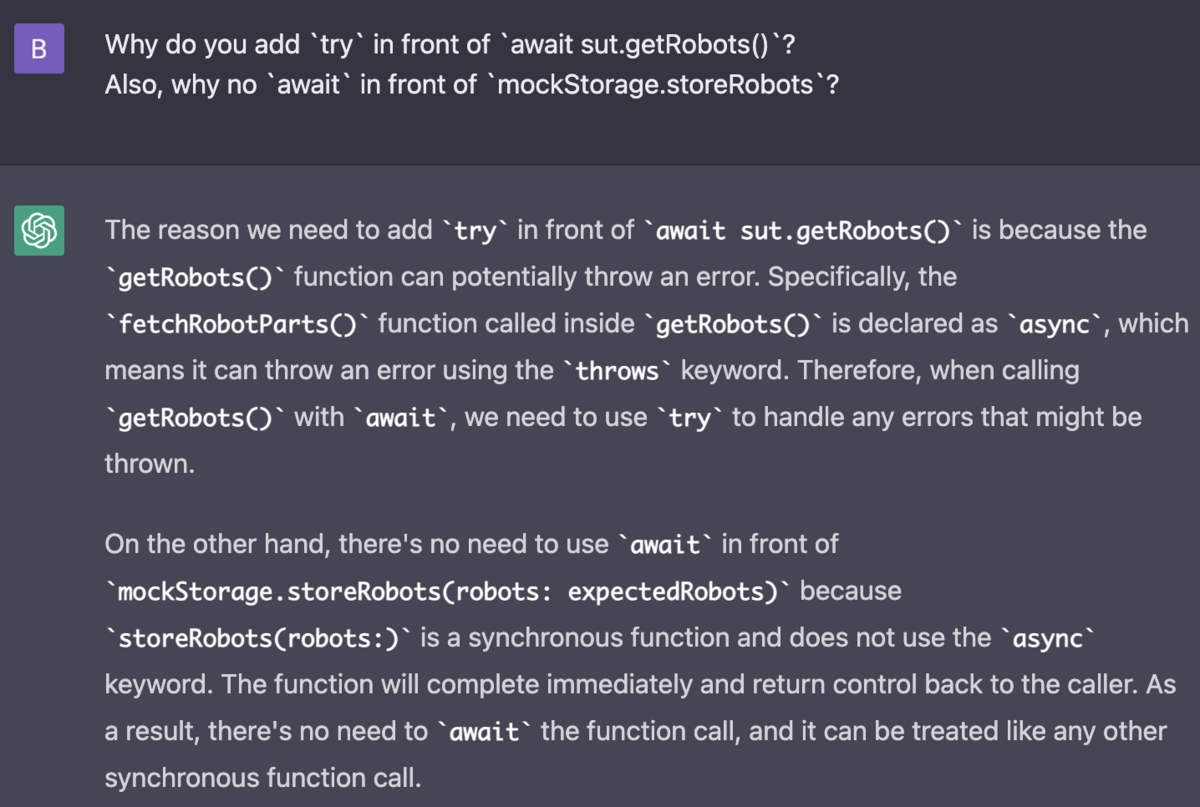

It seems that Swift Concurrency, the (relatively) new async/await model introduced in Swift 5.5, makes ChatGPT struggle a little. Both mistakes it made are related to it:

1. storeRobots is declared as async in the RobotsStorage protocol, but ChatGPT ignored that and called it without await.

2. When ChatGPT awaits the result of the getRobots call, it adds the try keyword, meaning it expects that call to potentially throw an error, even though the method's signature doesn't say it can.
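Both rules appear in the minimal standalone sketch below. The functions and LoadError are made up for illustration. A call to an async function must be marked with await, and try is only required when the callee is also declared throws. Omitting await is a compile-time error, while a try in front of a call that cannot throw only produces a compiler warning. That is why the second mistake is harmless but misleading.

```swift
// Hypothetical error type and functions, made up for illustration.
struct LoadError: Error {}

// Async but not throwing: callers need `await`, but not `try`.
func loadNames() async -> [String] {
    ["Head1", "Body1"]
}

// Async and throwing: callers need `try await`.
func loadNamesOrFail(shouldFail: Bool) async throws -> [String] {
    if shouldFail { throw LoadError() }
    return ["Head1", "Body1"]
}

func demo() async -> (names: [String], failed: Bool) {
    let names = await loadNames() // `await` is required, `try` is not
    // let broken = loadNames()   // would not compile: async call without `await`
    var failed = false
    do {
        _ = try await loadNamesOrFail(shouldFail: true) // `try await` is required here
    } catch {
        failed = true
    }
    return (names, failed)
}

let result = await demo()
print(result.names)  // ["Head1", "Body1"]
print(result.failed) // true
```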

When I asked ChatGPT why it did so and later asked it to fix the code, it started arguing with me, and it was clearly wrong. After talking to it for a while, I still couldn't convince ChatGPT that I was right, so I ended up fixing those mistakes myself (thankfully, that was really quick).

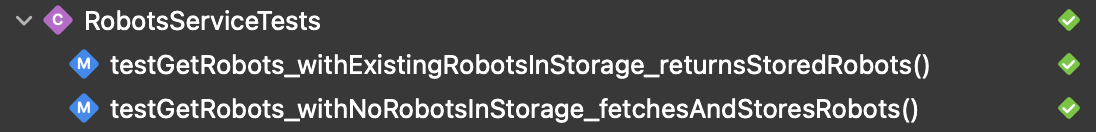

Here is what we have in the end. Behind the scenes, I asked ChatGPT to write more tests for other scenarios, but posting them all would make the article unreasonably long, so here is the initial "couple":

```swift
import XCTest
// In a real test target you would also `@testable import` the module that defines RobotsService.

class RobotsServiceTests: XCTestCase {
    var sut: RobotsService!
    var mockAPI: MockRobotsAPI!
    var mockStorage: MockRobotsStorage!

    override func setUp() {
        super.setUp()
        mockAPI = MockRobotsAPI()
        mockStorage = MockRobotsStorage()
        sut = RobotsService(api: mockAPI, storage: mockStorage)
    }

    override func tearDown() {
        mockAPI = nil
        mockStorage = nil
        sut = nil
        super.tearDown()
    }

    func testGetRobots_withExistingRobotsInStorage_returnsStoredRobots() async throws {
        // Arrange: pre-fill the storage, so the service should return its contents
        let expectedRobots = [Robot(head: RobotHead(name: "Head1"), body: RobotBody(name: "Body1")),
                              Robot(head: RobotHead(name: "Head2"), body: RobotBody(name: "Body2"))]
        await mockStorage.storeRobots(robots: expectedRobots)

        // Act
        let actualRobots = await sut.getRobots()

        // Assert
        XCTAssertEqual(actualRobots.map { $0.roboName }, expectedRobots.map { $0.roboName })
    }

    func testGetRobots_withNoRobotsInStorage_fetchesAndStoresRobots() async throws {
        // Arrange: the storage is empty, so robots are assembled from MockRobotsAPI's parts
        let expectedRobots = [Robot(head: RobotHead(name: "Head1"), body: RobotBody(name: "Body1")),
                              Robot(head: RobotHead(name: "Head2"), body: RobotBody(name: "Body2"))]

        // Act
        let actualRobots = await sut.getRobots()

        // Assert: the robots are returned and also persisted in the storage
        XCTAssertEqual(actualRobots.map { $0.roboName }, expectedRobots.map { $0.roboName })
        let storedRobots = await mockStorage.fetchRobots()
        XCTAssertEqual(storedRobots.map { $0.roboName }, expectedRobots.map { $0.roboName })
    }
}

// Always returns the same two heads and two bodies.
class MockRobotsAPI: RobotsAPI {
    func fetchRobotParts() async -> RobotParts {
        // Return some test RobotParts object
        return RobotParts(heads: [RobotHead(name: "Head1"), RobotHead(name: "Head2")],
                          bodies: [RobotBody(name: "Body1"), RobotBody(name: "Body2")])
    }
}

// Keeps robots in memory; returns an empty array until something is stored.
class MockRobotsStorage: RobotsStorage {
    private var robots: [Robot]?

    func storeRobots(robots: [Robot]) async {
        self.robots = robots
    }

    func fetchRobots() async -> [Robot] {
        return robots ?? []
    }
}
```

And with a little bit of tweaking, we have some working tests.
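One gap is worth noting: the first test checks the returned robots but not whether the API call was skipped. Below is a sketch of how a call-counting mock (a "spy") could cover that. It is written without XCTest so it runs as a plain script. SpyRobotsAPI and fetchCallCount are hypothetical names. In the real test target, these checks would become XCTAssertEqual calls inside RobotsServiceTests.

```swift
struct RobotHead { let name: String }
struct RobotBody { let name: String }

struct Robot {
    let head: RobotHead
    let body: RobotBody
    var roboName: String { "\(head.name)-\(body.name)" }
}

struct RobotParts {
    let heads: [RobotHead]
    let bodies: [RobotBody]
}

protocol RobotsAPI {
    func fetchRobotParts() async -> RobotParts
}

protocol RobotsStorage {
    func storeRobots(robots: [Robot]) async
    func fetchRobots() async -> [Robot]
}

// RobotsService from the article, condensed.
class RobotsService {
    private let api: RobotsAPI
    private let storage: RobotsStorage

    init(api: RobotsAPI, storage: RobotsStorage) {
        self.api = api
        self.storage = storage
    }

    func getRobots() async -> [Robot] {
        let storedRobots = await storage.fetchRobots()
        guard storedRobots.isEmpty else { return storedRobots }
        let robotParts = await api.fetchRobotParts()
        let robots = assembleRobots(from: robotParts)
        await storage.storeRobots(robots: robots)
        return await storage.fetchRobots()
    }

    private func assembleRobots(from parts: RobotParts) -> [Robot] {
        var index = 0
        var robots: [Robot] = []
        while index < parts.heads.count, index < parts.bodies.count {
            robots.append(Robot(head: parts.heads[index], body: parts.bodies[index]))
            index += 1
        }
        return robots
    }
}

// Hypothetical spy: returns fixed parts and records how many times it was called.
class SpyRobotsAPI: RobotsAPI {
    private(set) var fetchCallCount = 0

    func fetchRobotParts() async -> RobotParts {
        fetchCallCount += 1
        return RobotParts(heads: [RobotHead(name: "Head1")], bodies: [RobotBody(name: "Body1")])
    }
}

// Same behavior as the article's MockRobotsStorage.
class MockRobotsStorage: RobotsStorage {
    private var robots: [Robot]?

    func storeRobots(robots: [Robot]) async { self.robots = robots }
    func fetchRobots() async -> [Robot] { robots ?? [] }
}

// Scenario 1: the storage already has robots, so the API must not be called.
let spyAPI = SpyRobotsAPI()
let filledStorage = MockRobotsStorage()
await filledStorage.storeRobots(robots: [Robot(head: RobotHead(name: "Stored"), body: RobotBody(name: "Robot"))])
let cachedRobots = await RobotsService(api: spyAPI, storage: filledStorage).getRobots()
print(cachedRobots.map { $0.roboName }, spyAPI.fetchCallCount) // ["Stored-Robot"] 0

// Scenario 2: the storage is empty, so the API is called exactly once.
let emptySpy = SpyRobotsAPI()
let fetchedRobots = await RobotsService(api: emptySpy, storage: MockRobotsStorage()).getRobots()
print(fetchedRobots.map { $0.roboName }, emptySpy.fetchCallCount) // ["Head1-Body1"] 1
```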

Conclusion

Overall, ChatGPT does look promising: it can take on some of the most tedious tasks in our job, such as writing unit tests. However, it is important to rely on it only where the cost of a mistake is not too high (as with unit tests). To do its job well, ChatGPT needs the simplest context possible. As iOS developers, we have several tools to simplify that context and abstract parts of our code base away from each other, among them Protocol-Oriented Programming and Dependency Injection, which we looked at today. I hope you found this article useful. Thank you for reading!

UniFa is actively recruiting. If you're interested, please check our website for more details: unifa-e.com