Feature Extraction SIFT (Scale Invariant Feature Transform)

Why am I making this post?

Well, I basically needed to make my own SIFT algorithm as there is no free one in OpenCV anymore, well at least 3.0+.

For computer vision, one of the most basic ideas is to extract information from an image. This is feature extraction. There are different levels of features mainly global and local features. This blog will look at SIFT which is a local feature extractor. This is done by finding key points or areas of great change then adds quantitative information or descriptors that can then be used in a more complex task like object detection. Ideally, these key points should be able to be uniquely identified in various images regardless of transformations or changes in the image.

Why Python?

Yes, it's not the best in speed for this, and after running the code it takes a hot minute for it to do the feature extraction. But, I can easily use it in any computer vision project that I have now and it plugs and play no problem.

How does SIFT work?

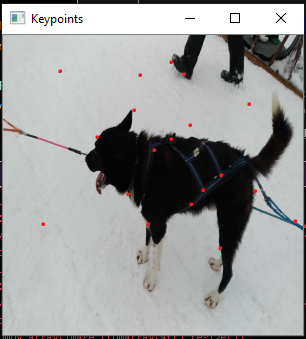

First, you give SIFT a picture to work with, we will be using an image I took of a dog from when I went dog sledding in Finland.

Step1: Double the size of your image both using bilinear interpolation.

Step 2: Blur the image using Gaussian Convolution.

Step 3: Preform more convolutions using Standard Deviation.

Step 4: Downsample each image.

Step 5: Restart the convolution again.

Continue this until the image is too small to perform these steps anymore.

This is called a scale-space which will help simulate many different scales that an image can come in (i.e. from small to larger and everything in between).

After the convolution, we will have to get the Laplacian for each scale space. This gives a grey scale value for each element in the image. The max values for the Laplacian will then be our key points. The max pixel will be a pixel whose value is larger than all its surrounding other pixels. This can be extended to several pixels or even a larger area depending on your needs. We can refine the key point results in the scale space by using a Quadratic Taylor expansion. Along with this, the identification of key points that lie on the edge of an object should also be removed as they are poor key points as they are not unique to translations of an image and are parallel to the edge direction. The point of keypoint extractions is not to find an edge of an object, but rather to find unique features of the image which may or may not lie on the edge of a target. That is the difference between edge detection and key point detection.

Finally, SIFT will give a reference orientation to each key point. Then the gradient of the image will be calculated by finite differences. Then smooth the gradients of the image using box blurs. This will allow for the remaining points that exceed a certain value/threshold to be kept. Another key point will be discarded.

After all of that, we have a list of final key points that we can create the descriptors for. This is done by creating a histogram of the gradient directions for each key point. This will not just make one histogram as it will make several around in a circle of the pixel where the histogram corresponds to the center pixel. Each gradient is a circle shape (rather than a box as used previously), and any key point that cannot create a full circle will be discarded.

So, after all of this, we now are left with a set of key points that are local features that can help us identify unique objects in images. This can then be used on its own for simple computer vision tasks like object identification, image slicing, and binding, or even image similarity for search engines. So, SIFT can be very useful if you know how and why it works.

Code for SIFT

Here is the pseudo code for getting SIFT for key point detection.

Sift Code

Find_Key_Points(image):

Gaussian Smoothing (image)

Downsample the image

Make Gaussians Pyramids

Create Downsample Gaussians Pyramids

For each octave:

Start Extrema detection.

for each image sample at each scale:

find the gradient magnitude

find the orientation

Calculate each keypoints orientation

Calculate each keypoints descriptor

As you can see the key points found in this image is not perfect, but the implementation of SIFT is not very easy. Also, this image has a lot of noise in it so it may look like the algorithm did not work, but it is working fine, but the changes are on such a small level that it is difficult to even see it.

And for those who want to see here is the whole (all be it abridged) code

import numpy as np from scipy import signal from scipy import misc from scipy import ndimage from scipy.stats import multivariate_normal from numpy.linalg import norm import numpy.linalg def sift_generate_keypoints(imagename, threshold): original = ndimage.imread(imagename, flatten=True) s = 3 k = 2 ** (1.0 / s) # Standard deviations for Gaussian smoothing vector_1 = np.array([1.3, 1.6, 1.6 * k, 1.6 * (k ** 2), 1.6 * (k ** 3), 1.6 * (k ** 4)]) vector_2 = np.array([1.6 * (k ** 2), 1.6 * (k ** 3), 1.6 * (k ** 4), 1.6 * (k ** 5), 1.6 * (k ** 6), 1.6 * (k ** 7)]) ... vector_total = np.array([1.6, 1.6 * k, 1.6 * (k ** 2), 1.6 * (k ** 3), 1.6 * (k ** 4), 1.6 * (k ** 5), 1.6 * (k ** 6), 1.6 * (k ** 7), 1.6 * (k ** 8), 1.6 * (k ** 9), 1.6 * (k ** 10), 1.6 * (k ** 11)]) # Downsampling images doubled = misc.imresize(original, 200, 'bilinear').astype(int) normal = misc.imresize(doubled, 50, 'bilinear').astype(int) halved = misc.imresize(normal, 50, 'bilinear').astype(int) quartered = misc.imresize(halved, 50, 'bilinear').astype(int) # Initialize Gaussian pyramids pyramid_l_1 = np.zeros((doubled.shape[0], doubled.shape[1], 6)) pyramid_l_2 = np.zeros((normal.shape[0], normal.shape[1], 6)) pyramid_l_3 = np.zeros((halved.shape[0], halved.shape[1], 6)) pyramid_l_4 = np.zeros((quartered.shape[0], quartered.shape[1], 6)) # Gaussian pyramids for i in range(0, 6): pyramid_l_1[:,:,i] = ndimage.filters.gaussian_filter(doubled, kvec1[i]) ... pyramid_l_4[:,:,i] = misc.imresize(ndimage.filters.gaussian_filter(doubled, kvec4[i]), 1.0 / 8.0, 'bilinear') # Difference-of-Gaussians (DoG) pyramids dog_pyrd_l_1 = np.zeros((doubled.shape[0], doubled.shape[1], 5)) ... dog_pyrd_l_4 = np.zeros((quartered.shape[0], quartered.shape[1], 5)) # Construct DoG pyramids for i in range(0, 5): dog_pyrd_l_1[:,:,i] = pyrlvl1[:,:,i+1] - pyrlvl1[:,:,i] ... dog_pyrd_l_4[:,:,i] = pyrlvl4[:,:,i+1] - pyrlvl4[:,:,i] # extrema locations pyramids extrem_loc_l_1 = np.zeros((doubled.shape[0], doubled.shape[1], 3)) ... extrem_loc_l_4 = np.zeros((quartered.shape[0], quartered.shape[1], 3)) for i in range(1, 4): for j in range(80, doubled.shape[0] - 80): for k in range(80, doubled.shape[1] - 80): if np.absolute(dog_pyrd_l_1[j, k, i]) < threshold: continue maxbool = (dog_pyrd_l_1[j, k, i] > 0) minbool = (dog_pyrd_l_1[j, k, i] < 0) for di in range(-1, 2): for dj in range(-1, 2): for dk in range(-1, 2): if di == 0 and dj == 0 and dk == 0: continue maxbool = maxbool and (dog_pyrd_l_1[j, k, i] > dog_pyrd_l_1[j + dj, k + dk, i + di]) minbool = minbool and (dog_pyrd_l_1[j, k, i] < dog_pyrd_l_1[j + dj, k + dk, i + di]) if not maxbool and not minbool: break if not maxbool and not minbool: break if not maxbool and not minbool: break if maxbool or minbool: dx = (dog_pyrd_l_1[j, k+1, i] - dog_pyrd_l_1[j, k-1, i]) * 0.5 / 255 dy = (dog_pyrd_l_1[j+1, k, i] - dog_pyrd_l_1[j-1, k, i]) * 0.5 / 255 ds = (dog_pyrd_l_1[j, k, i+1] - dog_pyrd_l_1[j, k, i-1]) * 0.5 / 255 dxx = (dog_pyrd_l_1[j, k+1, i] + dog_pyrd_l_1[j, k-1, i] - 2 * dog_pyrd_l_1[j, k, i]) * 1.0 / 255 dyy = (dog_pyrd_l_1[j+1, k, i] + dog_pyrd_l_1[j-1, k, i] - 2 * dog_pyrd_l_1[j, k, i]) * 1.0 / 255 dss = (dog_pyrd_l_1[j, k, i+1] + dog_pyrd_l_1[j, k, i-1] - 2 * dog_pyrd_l_1[j, k, i]) * 1.0 / 255 dxy = (dog_pyrd_l_1[j+1, k+1, i] - dog_pyrd_l_1[j+1, k-1, i] - dog_pyrd_l_1[j-1, k+1, i] + dog_pyrd_l_1[j-1, k-1, i]) * 0.25 / 255 dxs = (dog_pyrd_l_1[j, k+1, i+1] - dog_pyrd_l_1[j, k-1, i+1] - dog_pyrd_l_1[j, k+1, i-1] + dog_pyrd_l_1[j, k-1, i-1]) * 0.25 / 255 dys = (dog_pyrd_l_1[j+1, k, i+1] - dog_pyrd_l_1[j-1, k, i+1] - dog_pyrd_l_1[j+1, k, i-1] + dog_pyrd_l_1[j-1, k, i-1]) * 0.25 / 255 dD = np.matrix([[dx], [dy], [ds]]) H = np.matrix([[dxx, dxy, dxs], [dxy, dyy, dys], [dxs, dys, dss]]) x_hat = numpy.linalg.lstsq(H, dD)[0] D_x_hat = dog_pyrd_l_1[j, k, i] + 0.5 * np.dot(dD.transpose(), x_hat) r = 10.0 if ((((dxx + dyy) ** 2) * r) < (dxx * dyy - (dxy ** 2)) * (((r + 1) ** 2))) and (np.absolute(x_hat[0]) < 0.5) and (np.absolute(x_hat[1]) < 0.5) and (np.absolute(x_hat[2]) < 0.5) and (np.absolute(D_x_hat) > 0.03): extrem_loc_l_1[j, k, i - 1] = 1 #........ # Repeat for each octave # Gradient magnitude and orientation grad_mag_ori_l_1 = np.zeros((doubled.shape[0], doubled.shape[1], 3)) ... grad_mag_ori_l_4 = np.zeros((quartered.shape[0], quartered.shape[1], 3)) ori_pyramid_l_1 = np.zeros((doubled.shape[0], doubled.shape[1], 3)) ... ori_pyramid_l_4 = np.zeros((quartered.shape[0], quartered.shape[1], 3)) for i in range(0, 3): for j in range(1, doubled.shape[0] - 1): for k in range(1, doubled.shape[1] - 1): grad_mag_ori_l_1[j, k, i] = ( ((doubled[j+1, k] - doubled[j-1, k]) ** 2) + ((doubled[j, k+1] - doubled[j, k-1]) ** 2) ) ** 0.5 ori_pyramid_l_1[j, k, i] = (36 / (2 * np.pi)) * (np.pi + np.arctan2((doubled[j, k+1] - doubled[j, k-1]), (doubled[j+1, k] - doubled[j-1, k]))) # Repeat for each orientation pyramid extr_sum = int(np.sum(extrem_loc_l_1) + np.sum(extrem_loc_l_2) + np.sum(extrem_loc_l_3) + np.sum(extrem_loc_l_4)) keypoints = np.zeros((extr_sum, 4)) #Key point calculation count = 0 for i in range(0, 3): for j in range(80, doubled.shape[0] - 80): for k in range(80, doubled.shape[1] - 80): if extrem_loc_l_1[j, k, i] == 1: gaussian_window = multivariate_normal(mean=[j, k], cov=((1.5 * vector_total[i]) ** 2)) two_sd = np.floor(2 * 1.5 * vector_total[i]) orient_hist = np.zeros([36,1]) for x in range(int(-1 * two_sd * 2), int(two_sd * 2) + 1): ylim = int((((two_sd * 2) ** 2) - (np.absolute(x) ** 2)) ** 0.5) for y in range(-1 * ylim, ylim + 1): if j + x < 0 or j + x > doubled.shape[0] - 1 or k + y < 0 or k + y > doubled.shape[1] - 1: continue weight = grad_mag_ori_l_1[j + x, k + y, i] * gaussian_window.pdf([j + x, k + y]) bin_idx = np.clip(np.floor(ori_pyramid_l_1[j + x, k + y, i]), 0, 35) orient_hist[int(np.floor(bin_idx))] += weight maxval = np.amax(orient_hist) maxidx = np.argmax(orient_hist) keypoints[count, :] = np.array([int(j * 0.5), int(k * 0.5), vector_total[i], maxidx]) count += 1 orient_hist[maxidx] = 0 newmaxval = np.amax(orient_hist) while newmaxval > 0.8 * maxval: newmaxidx = np.argmax(orient_hist) np.append(keypoints, np.array([[int(j * 0.5), int(k * 0.5), vector_total[i], newmaxidx]]), axis=0) orient_hist[newmaxidx] = 0 newmaxval = np.amax(orient_hist) # Repeat for each octave # Create descriptors magnit_py = np.zeros((normal.shape[0], normal.shape[1], 12)) orient_py = np.zeros((normal.shape[0], normal.shape[1], 12)) for i in range(0, 3): magmax = np.amax(grad_mag_ori_l_1[:, :, i]) magnit_py[:, :, i] = misc.imresize(grad_mag_ori_l_1[:, :, i], (normal.shape[0], normal.shape[1]), "bilinear").astype(float) magnit_py[:, :, i] = (magmax / np.amax(magnit_py[:, :, i])) * magnit_py[:, :, i] orient_py[:, :, i] = misc.imresize(ori_pyramid_l_1[:, :, i], (normal.shape[0], normal.shape[1]), "bilinear").astype(int) orient_py[:, :, i] = ((36.0 / np.amax(orient_py[:, :, i])) * orient_py[:, :, i]).astype(int) for i in range(0, 3): magnit_py[:, :, i+3] = (magpyrlvl2[:, :, i]).astype(float) orient_py[:, :, i+3] = (oripyrlvl2[:, :, i]).astype(int) for i in range(0, 3): magnit_py[:, :, i+6] = misc.imresize(magpyrlvl3[:, :, i], (normal.shape[0], normal.shape[1]), "bilinear").astype(int) orient_py[:, :, i+6] = misc.imresize(oripyrlvl3[:, :, i], (normal.shape[0], normal.shape[1]), "bilinear").astype(int) for i in range(0, 3): magnit_py[:, :, i+9] = misc.imresize(grad_mag_ori_l_4[:, :, i], (normal.shape[0], normal.shape[1]), "bilinear").astype(int) orient_py[:, :, i+9] = misc.imresize(ori_pyramid_l_4[:, :, i], (normal.shape[0], normal.shape[1]), "bilinear").astype(int) descriptors = np.zeros([keypoints.shape[0], 128]) for i in range(0, keypoints.shape[0]): for x in range(-8, 8): for y in range(-8, 8): theta = 10 * keypoints[i,3] * np.pi / 180.0 xrot = np.round((np.cos(theta) * x) - (np.sin(theta) * y)) yrot = np.round((np.sin(theta) * x) + (np.cos(theta) * y)) scale_idx = np.argwhere(vector_total == keypoints[i,2])[0][0] x0 = keypoints[i,0] y0 = keypoints[i,1] gaussian_window = multivariate_normal(mean=[x0,y0], cov=8) weight = magnit_py[int(x0 + xrot), int(y0 + yrot), scale_idx] * gaussian_window.pdf([x0 + xrot, y0 + yrot]) angle = orient_py[int(x0 + xrot), int(y0 + yrot), scale_idx] - keypoints[i,3] if angle < 0: angle = 36 + angle bin_idx = np.clip(np.floor((8.0 / 36) * angle), 0, 7).astype(int) descriptors[i, 32 * int((x + 8)/4) + 8 * int((y + 8)/4) + bin_idx] += weight descriptors[i, :] = descriptors[i, :] / norm(descriptors[i, :]) descriptors[i, :] = np.clip(descriptors[i, :], 0, 0.2) descriptors[i, :] = descriptors[i, :] / norm(descriptors[i, :]) return [keypoints, descriptors]

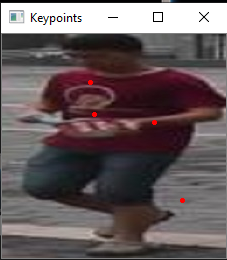

Now for a more real-world example. Getting data from the Market1501 data set that holds several images of people for different tasks. By running one of the images through the key point extractor, it allows for you to find the local features that are unique to the image itself.

Conclusion

This implementation is not the state of the art method for getting key points and is very susceptible to blurry or poor image quality, as well as background noise and noise in the image that you cannot detect yourself. This took a long while to implement and the results are not that great compared to the time it took to create this. In my opinion, I would look into other means of extracting local features like the segmentation of the image.

Reference

Otero, I. R., & Delbracio, M. (2014). Anatomy of the SIFT Method. Image Processing On Line,4, 370-396. doi:10.5201/ipol.2014.82

Market data set retrived from here:

https://jingdongwang2017.github.io/Projects/ReID/Datasets/Market-1501.html