By Matthew Millar, R&D Scientist at ユニファ

Purpose:

This blog series shows the development process behind a new research paper that I am working on.

The goal of this series of blog posts is to develop, slowly but surely, a product that can aid in labeling data attributes for humans, and even for other types of image data.

This could be used in several products, including people identification, tracking, and statistical data analysis.

Are you ready? Try to keep up!

Attribute Recognition:

What is Attribute Recognition? It is the process of identifying which properties are present in an image. It is normally applied to people, but it can be applied to pretty much anything, from cities to cars and even airplanes. The ability to predict the presence or absence of an item can be very beneficial. Applications include tracking people, safety checks of a vehicle (like a bus or a plane) before departure, visual inspection of an assembled computer, and even uses in nuclear power plants. A simple scan of an image can yield an important warning, allowing a problem to be detected before a disaster occurs.
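As a rough illustration of predicting "presence or absence", a recognizer that handles several attributes can output one independent probability per attribute and threshold each one. The sketch below is a minimal example under stated assumptions: the attribute names and the 0.5 threshold are hypothetical, and the model built later in this post handles only a single attribute.

```python
from typing import Dict, Sequence


def attributes_present(
    probabilities: Sequence[float],
    names: Sequence[str],
    threshold: float = 0.5,
) -> Dict[str, bool]:
    """Turn per-attribute probabilities into present/absent flags.

    Each attribute is judged independently (multi-label), so several can be
    present at once. Attribute names and the threshold are illustrative only.
    """
    if len(probabilities) != len(names):
        raise ValueError("need exactly one probability per attribute name")
    return {name: p >= threshold for name, p in zip(names, probabilities)}


# Hypothetical model outputs for one image
print(attributes_present([0.91, 0.12, 0.55], ["backpack", "hat", "long_hair"]))
```

Treating each attribute as its own yes/no decision is what lets the same idea extend from a single binary attribute, such as gender, to many attributes per image.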

Data set:

The data-set I will be using is Market-1501 (Zheng et al., 2015), which is commonly used for the person re-identification problem. Why use this data-set? Because of its size and the variety of people it contains: 32,668 annotated bounding boxes of 1,501 identities, captured by six cameras. The image quality is akin to that of a standard security camera, and the backgrounds vary from image to image. Training on this noisier data, rather than on a cleaned data-set, should make the model better at generalizing. This data-set will also give us many attributes to extract over the next few weeks.

Step 1 Battle of the Sexes:

The first, and possibly easiest, attribute to check is a person's gender. It can be framed as a binary classification problem, so it is not that big of a deal. If you're reading this, you have more likely than not read a dog-vs-cat classification post somewhere when you started learning CNNs. The model we will build is similar, so I will not go into great detail about the model itself.

Pre-processing Steps:

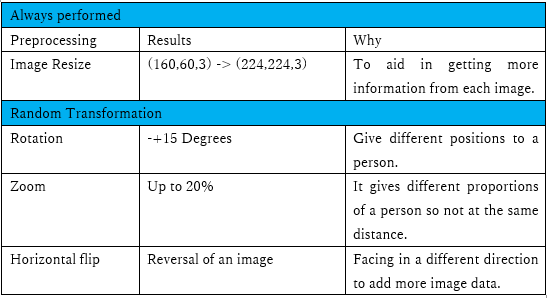

We start by pre-processing the images. First, we need to separate the images into the two classes (male and female) that we will train on. Then we need to split the data-set into training and validation sets.
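Keras's flow_from_directory expects one sub-folder per class under the data directory, so the class separation can be sketched as below. It relies on the Market-1501 naming scheme, in which each file name starts with the person ID (for example 0002_c1s1_000451_03.jpg). The gender_by_id mapping and the sort_by_gender helper are hypothetical: the mapping stands in for whatever per-identity gender annotation is available. Note that flow_from_directory assigns label indices in alphanumeric order of the folder names, so with these folder names female becomes 0 and male becomes 1.

```python
import shutil
from pathlib import Path
from typing import Dict


def person_id(filename: str) -> str:
    """Market-1501 file names start with the person ID, e.g. 0002_c1s1_000451_03.jpg."""
    return filename.split("_")[0]


def sort_by_gender(src_dir, dst_dir, gender_by_id: Dict[str, str]) -> Dict[str, int]:
    """Copy images into dst_dir/<label>/ so flow_from_directory can infer the classes.

    gender_by_id is a hypothetical {person_id: "male" | "female"} mapping.
    Images whose ID has no label (e.g. junk or distractor images) are skipped.
    Returns the number of images copied per class.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    counts: Dict[str, int] = {}
    for image in sorted(src.glob("*.jpg")):
        label = gender_by_id.get(person_id(image.name))
        if label is None:
            continue
        target = dst / label
        target.mkdir(parents=True, exist_ok=True)
        shutil.copy2(image, target / image.name)
        counts[label] = counts.get(label, 0) + 1
    return counts


# Example call (hypothetical paths and labels):
# sort_by_gender("Market-1501/bounding_box_train", "data", {"0002": "male"})
```

The resulting data folder, with female and male sub-folders, is what DATA_PATH would point to in the generator code.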

I will use Keras's ImageDataGenerator for the split, as it not only saves time but also lets me do all the other pre-processing steps at the same time. Its arguments specify all the random augmentations that may be applied to each image, along with the pre-processing steps that are always performed (such as rescaling).

Here is the code for the generators for both the training and validation data-sets. Defining the image generators this way saves you from splitting up the data-set yourself, or from loading it into memory and using another Python library to do the splitting.

```python
from keras.preprocessing.image import ImageDataGenerator

# DATA_PATH (one sub-folder per class) and BATCH_SIZE are defined elsewhere.
train_datagen = ImageDataGenerator(
    rescale=1./255,          # always applied: scale pixel values to [0, 1]
    shear_range=0.2,         # random augmentations
    rotation_range=15,
    zoom_range=0.2,
    horizontal_flip=True,
    validation_split=0.2)    # hold out 20% of the images for validation

train_generator = train_datagen.flow_from_directory(
    DATA_PATH,
    target_size=(224, 224),
    batch_size=BATCH_SIZE,
    class_mode='binary',
    subset='training')       # set as training data

validation_generator = train_datagen.flow_from_directory(
    DATA_PATH,               # same directory as training data
    target_size=(224, 224),
    batch_size=BATCH_SIZE,
    class_mode='binary',
    subset='validation')     # set as validation data
```
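To make the two kinds of step concrete, here is a NumPy sketch of what rescale=1./255 (always applied) and horizontal_flip=True (random, with a 50% chance per image) do to a single height × width × channels array. It is a simplified illustration, not Keras's implementation, and the helper names are hypothetical. Note also that because both subsets above come from the same train_datagen, the validation images receive the same random augmentations as the training images; a common workaround is a second ImageDataGenerator with only rescale and the same validation_split for the validation subset.

```python
import numpy as np


def rescale(image: np.ndarray, factor: float = 1.0 / 255) -> np.ndarray:
    """Always-applied step: map 0-255 pixel values into [0, 1]."""
    return image.astype(np.float32) * factor


def random_horizontal_flip(image: np.ndarray, rng: np.random.Generator, p: float = 0.5) -> np.ndarray:
    """Random augmentation: mirror the image left-right with probability p."""
    if rng.random() < p:
        return image[:, ::-1, :]  # reverse the width axis
    return image


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)  # stand-in image
out = rescale(random_horizontal_flip(img, rng))
print(out.shape, out.dtype, float(out.min()), float(out.max()))
```

A left-right flip is a safe augmentation here because a mirrored person keeps the same gender label, which is not true of every attribute (for example, "bag on the left shoulder").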

With the generators defined, we can use them to train the model. The model will be a simple binary classifier; there is no real need to make it complex, as it is just one of many models that will be used in the product.

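```python
from keras.models import Sequential
from keras.layers import Conv2D, Activation, Dropout, MaxPooling2D, Flatten, Dense


def build(width=224, height=224, depth=3):
    model = Sequential()
    # Block 1: one convolution
    model.add(Conv2D(32, (3, 3), input_shape=(height, width, depth)))
    model.add(Activation('relu'))
    model.add(Dropout(0.3))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    # Block 2: two stacked convolutions (no activation between them)
    model.add(Conv2D(64, (3, 3)))
    model.add(Conv2D(64, (3, 3)))
    model.add(Activation('relu'))
    model.add(Dropout(0.3))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    # Block 3: two stacked convolutions
    model.add(Conv2D(128, (3, 3)))
    model.add(Conv2D(128, (3, 3)))
    model.add(Activation('relu'))
    model.add(Dropout(0.3))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    # Classifier: two hidden dense layers and one sigmoid output unit
    model.add(Flatten())
    model.add(Dense(128))
    model.add(Dense(128))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(1))
    model.add(Activation('sigmoid'))  # probability of the class with label 1
    return model
```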

Since this is a binary problem, a sigmoid activation on the single output unit is appropriate. The model is not very deep: it has five convolutional layers, followed by two fully connected hidden layers and one fully connected output layer.
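For one image, the sigmoid output and the binary_crossentropy loss used when compiling the model can be sketched in plain Python as below. The class order is an assumption worth checking: flow_from_directory assigns label indices in alphanumeric order of the sub-folder names, so with folders named female and male (hypothetical names), an output of 0.5 or more would mean "male". The function names are illustrative, not Keras APIs.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function: maps any real z into [0, 1]."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def binary_crossentropy(y_true: int, p: float, eps: float = 1e-7) -> float:
    """-[y log p + (1 - y) log(1 - p)], with p clipped away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


def predict_label(z: float, class_names=("female", "male"), threshold: float = 0.5) -> str:
    """Index 0/1 follows the assumed alphabetical folder order."""
    return class_names[int(sigmoid(z) >= threshold)]


print(sigmoid(0.0))                 # 0.5
print(binary_crossentropy(1, 0.9))  # small loss: confident and correct
print(binary_crossentropy(1, 0.1))  # large loss: confident and wrong
print(predict_label(2.0), predict_label(-2.0))  # male female
```

The loss punishes confident mistakes much more heavily than hesitant ones, which is why a single sigmoid unit paired with this loss is the standard setup for a two-class problem.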

Here is the model.summary() output for this model:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 222, 222, 32)      896
_________________________________________________________________
activation_1 (Activation)    (None, 222, 222, 32)      0
_________________________________________________________________
dropout_1 (Dropout)          (None, 222, 222, 32)      0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 111, 111, 32)      0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 109, 109, 64)      18496
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 107, 107, 64)      36928
_________________________________________________________________
activation_2 (Activation)    (None, 107, 107, 64)      0
_________________________________________________________________
dropout_2 (Dropout)          (None, 107, 107, 64)      0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 53, 53, 64)        0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 51, 51, 128)       73856
_________________________________________________________________
conv2d_5 (Conv2D)            (None, 49, 49, 128)       147584
_________________________________________________________________
activation_3 (Activation)    (None, 49, 49, 128)       0
_________________________________________________________________
dropout_3 (Dropout)          (None, 49, 49, 128)       0
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 24, 24, 128)       0
_________________________________________________________________
flatten_1 (Flatten)          (None, 73728)             0
_________________________________________________________________
dense_1 (Dense)              (None, 128)               9437312
_________________________________________________________________
dense_2 (Dense)              (None, 128)               16512
_________________________________________________________________
activation_4 (Activation)    (None, 128)               0
_________________________________________________________________
dropout_4 (Dropout)          (None, 128)               0
_________________________________________________________________
dense_3 (Dense)              (None, 1)                 129
_________________________________________________________________
activation_5 (Activation)    (None, 1)                 0
=================================================================
Total params: 9,731,713
Trainable params: 9,731,713
Non-trainable params: 0
_________________________________________________________________
```
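To see where the roughly 9.7 million parameters come from, the plain-Python sketch below recomputes the output sizes and parameter counts in the summary from the layer definitions in build(). It assumes unpadded ("valid") 3 × 3 convolutions with stride 1, which is the Keras default, and 2 × 2 max pooling; the helper names are hypothetical.

```python
def conv_out(size: int, kernel: int = 3) -> int:
    """Spatial size after an unpadded ('valid') stride-1 convolution."""
    return size - kernel + 1


def conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """k * k * c_in weights per filter, plus one bias per filter."""
    return kernel * kernel * c_in * c_out + c_out


def dense_params(n_in: int, n_out: int) -> int:
    """Full weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out


def count_params(size: int = 224, channels: int = 3):
    """Recompute layer sizes and parameter counts for the build() architecture."""
    rows = []  # (layer description, parameter count)
    c_in = channels
    for block in ([32], [64, 64], [128, 128]):
        for filters in block:
            size = conv_out(size)
            rows.append((f"Conv2D {filters} -> {size}x{size}", conv_params(3, c_in, filters)))
            c_in = filters
        size //= 2  # 2x2 max pooling floors odd sizes, e.g. 107 -> 53
    flat = size * size * c_in
    for n_in, n_out in ((flat, 128), (128, 128), (128, 1)):
        rows.append((f"Dense {n_in} -> {n_out}", dense_params(n_in, n_out)))
    return flat, rows


flat, rows = count_params()
total = sum(params for _, params in rows)
for name, params in rows:
    print(f"{name:<24} {params:>9,}")
print("flattened features:", flat)  # 73728
print("total params:", total)       # 9731713
```

About 97% of the parameters sit in the first Dense layer, because it connects every one of the 73,728 flattened features to 128 units.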

With the model defined, we can turn our attention to training it.

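```python
from keras.optimizers import SGD
from keras.callbacks import ModelCheckpoint

# LR and EPOCHS are hyperparameters defined elsewhere (the log below uses EPOCHS = 10).
# Argument and metric names ('lr', 'decay', 'val_acc', fit_generator) follow the
# older Keras 2.x API that produced the log below.
opt = SGD(lr=LR, momentum=0.9, decay=LR / EPOCHS)
model = build()  # 224x224 RGB input, matching the generators
model.compile(loss="binary_crossentropy", optimizer=opt, metrics=["accuracy"])

# Save the full model each time validation accuracy improves
filepath = "GenderID-{epoch:02d}-{val_acc:.4f}.ckpt"
checkpoint = ModelCheckpoint(filepath, monitor='val_acc', verbose=1,
                             save_best_only=True, mode='max',
                             save_weights_only=False)
callbacks_list = [checkpoint]

model.fit_generator(
    train_generator,
    steps_per_epoch=train_generator.samples // BATCH_SIZE,
    validation_data=validation_generator,
    validation_steps=validation_generator.samples // BATCH_SIZE,
    epochs=EPOCHS,
    verbose=1,
    callbacks=callbacks_list)
```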
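One detail of this optimizer setting is easy to miss: in the Keras 2.x SGD optimizer, decay applies time-based decay per update (per batch), as lr / (1 + decay × iterations), not per epoch. The sketch below reproduces that formula in plain Python. LR = 0.01 is a hypothetical value, since the actual LR is not given; the 10 epochs of 322 steps come from the training log.

```python
def keras2_sgd_lr(initial_lr: float, decay: float, iteration: int) -> float:
    """Effective learning rate after `iteration` batch updates under time-based decay."""
    return initial_lr / (1.0 + decay * iteration)


LR = 0.01              # hypothetical value, for illustration only
EPOCHS = 10            # from the training log
STEPS_PER_EPOCH = 322  # from the training log
decay = LR / EPOCHS    # the setting used above

for epoch in (0, 1, 5, 10):
    iteration = epoch * STEPS_PER_EPOCH
    print(f"after epoch {epoch:2d}: lr = {keras2_sgd_lr(LR, decay, iteration):.5f}")
```

With these assumed values the learning rate would end training at under a quarter of its starting value (0.01 / 4.22). How fast it shrinks depends on LR itself, because the decay rate is derived from it.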

As the training log below shows, the model produced pretty good results: training accuracy reached about 80%, and validation accuracy peaked at about 78.5% in epoch 6.

```
Epoch 1/10
322/322 [==============================] - 139s 432ms/step - loss: 0.6444 - acc: 0.6356 - val_loss: 0.6126 - val_acc: 0.7211
Epoch 00001: val_acc improved from -inf to 0.72109, saving model to GenderID-01-0.7211.ckpt
Epoch 2/10
322/322 [==============================] - 128s 398ms/step - loss: 0.5833 - acc: 0.6987 - val_loss: 0.5848 - val_acc: 0.7490
Epoch 00002: val_acc improved from 0.72109 to 0.74902, saving model to GenderID-02-0.7490.ckpt
Epoch 3/10
322/322 [==============================] - 128s 399ms/step - loss: 0.5459 - acc: 0.7334 - val_loss: 0.5795 - val_acc: 0.7565
Epoch 00003: val_acc improved from 0.74902 to 0.75647, saving model to GenderID-03-0.7565.ckpt
Epoch 4/10
322/322 [==============================] - 125s 388ms/step - loss: 0.5208 - acc: 0.7462 - val_loss: 0.5736 - val_acc: 0.7137
Epoch 00004: val_acc did not improve from 0.75647
Epoch 5/10
322/322 [==============================] - 125s 390ms/step - loss: 0.4986 - acc: 0.7637 - val_loss: 0.5472 - val_acc: 0.7212
Epoch 00005: val_acc did not improve from 0.75647
Epoch 6/10
322/322 [==============================] - 124s 384ms/step - loss: 0.4912 - acc: 0.7667 - val_loss: 0.5136 - val_acc: 0.7851
Epoch 00006: val_acc improved from 0.75647 to 0.78510, saving model to GenderID-06-0.7851.ckpt
Epoch 7/10
322/322 [==============================] - 124s 384ms/step - loss: 0.4674 - acc: 0.7799 - val_loss: 0.5209 - val_acc: 0.7745
Epoch 00007: val_acc did not improve from 0.78510
Epoch 8/10
322/322 [==============================] - 124s 385ms/step - loss: 0.4485 - acc: 0.7925 - val_loss: 0.4978 - val_acc: 0.7643
Epoch 00008: val_acc did not improve from 0.78510
Epoch 9/10
322/322 [==============================] - 123s 381ms/step - loss: 0.4323 - acc: 0.8022 - val_loss: 0.5000 - val_acc: 0.7737
Epoch 00009: val_acc did not improve from 0.78510
Epoch 10/10
322/322 [==============================] - 124s 386ms/step - loss: 0.4277 - acc: 0.8037 - val_loss: 0.5061 - val_acc: 0.7565
Epoch 00010: val_acc did not improve from 0.78510
```
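Because ModelCheckpoint was run with save_best_only=True, the last checkpoint written (GenderID-06-0.7851.ckpt in the log) corresponds to the epoch with the highest val_acc, not the final epoch. The short sketch below uses the accuracy values copied from the log to find that epoch and the train/validation gap per epoch; the helper names are hypothetical.

```python
# Per-epoch accuracies copied from the training log above.
TRAIN_ACC = [0.6356, 0.6987, 0.7334, 0.7462, 0.7637, 0.7667, 0.7799, 0.7925, 0.8022, 0.8037]
VAL_ACC = [0.7211, 0.7490, 0.7565, 0.7137, 0.7212, 0.7851, 0.7745, 0.7643, 0.7737, 0.7565]


def best_epoch(val_acc):
    """Return (1-based epoch, value) of the highest validation accuracy."""
    idx = max(range(len(val_acc)), key=lambda i: val_acc[i])
    return idx + 1, val_acc[idx]


def accuracy_gap(train_acc, val_acc):
    """Training minus validation accuracy for each epoch."""
    return [t - v for t, v in zip(train_acc, val_acc)]


epoch, acc = best_epoch(VAL_ACC)
print(f"best val_acc {acc:.4f} at epoch {epoch}")  # best val_acc 0.7851 at epoch 6
for i, gap in enumerate(accuracy_gap(TRAIN_ACC, VAL_ACC), start=1):
    print(f"epoch {i:2d}: train - val = {gap:+.4f}")
```

The gap starts negative, plausibly because dropout is active only during training and the training accuracy is averaged over an epoch while the weights are still improving, and ends at about +0.047: training accuracy keeps climbing while validation accuracy levels off.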

Testing:

Testing the model on some images of both men and women gave roughly the results I expected.

For men, the accuracy was 65.17%.

For women, the accuracy was 48.36%, which is slightly worse than chance.

So the model is noticeably better at recognizing men than women, by about 17 percentage points.

The total accuracy was 58.36%. That is only somewhat better than guessing randomly, but I will take it as a win.
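The per-class figures above are the fraction of each true class that was predicted correctly. A minimal sketch of how they, and the total, can be computed from lists of true and predicted labels follows; the function name and the toy labels are hypothetical and are not the test data used here.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def per_class_accuracy(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Tuple[Dict[Hashable, float], float]:
    """Accuracy within each true class, plus overall accuracy."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    totals, correct = Counter(y_true), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            correct[t] += 1
    per_class = {c: correct[c] / n for c, n in totals.items()}
    overall = sum(correct.values()) / len(y_true)
    return per_class, overall


# Toy example with made-up labels
y_true = ["male", "male", "male", "female", "female"]
y_pred = ["male", "male", "female", "female", "male"]
print(per_class_accuracy(y_true, y_pred))
```

Overall accuracy is the average of the per-class accuracies weighted by each class's share of the test images. If all three reported numbers come from the same pooled test set, 58.36% lying between 48.36% and 65.17% implies that about 59% of the test images were of men, since (58.36 − 48.36) / (65.17 − 48.36) ≈ 0.595.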

CONCLUSION:

The model shows that it can learn something useful for this complex problem, but there is clearly room for improvement. How can we improve it? One option is to use a pre-trained model to aid in the feature extraction of an image; another is to use better data augmentation techniques.

The model can predict, better than chance, whether a person in an image is a man or a woman without relying on their face, which is a very difficult task. Why is this important? It makes it possible to estimate a person's sex from a distance, even when their face is obscured by clothing such as a jacket. It also means that lower-resolution security cameras could still be used to tell, with some accuracy, whether a person is a man or a woman.

Future Improvement:

From here I will add weight initializers, deepen the network, add a pre-trained, fine-tuned model, and improve the data augmentation. This should give somewhat better results and possibly reach my goal of 65%, which would be a very good model for this particular task.

References:

L. Zheng, L. Shen, L. Tian, S. Wang, J. Wang and Q. Tian, "Scalable Person Re-identification: A Benchmark," 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, 2015, pp. 1116-1124, doi: 10.1109/ICCV.2015.133.