By Matthew Millar, R&D Scientist at ユニファ

Purpose:

This is part III of the MAG (Multi-Model Attribute Generator) paper I am working on. This post focuses on identifying what clothing a person is wearing on the upper half of the body. As in the last post, color is not considered yet; that will follow later. The model classifies two clothing types: long sleeves and short sleeves. With it, the classification of the clothing worn by a person is complete.

PART I: Multi-Model Attribute Generator - ユニファ開発者ブログ

PART II: MAG part II - ユニファ開発者ブログ

Lessons Learned:

Looking at the failures and successes of the lower body discriminator, we can apply what we learned to build a more accurate model more quickly.

One issue in the last post was the difficulty of telling whether a person is wearing shorts or pants. There were a lot of misclassifications due to the data sorting criteria that I used. To limit this issue in the upper body model, I made a "hard choice" criterion: if any skin is shown, the shirt is considered a short sleeve shirt. This is not ideal, as some people in the new dataset had rolled up their sleeves. These cases were discarded, as they would cause issues in the model's training. This might be a problem, because these people are truly wearing long sleeve shirts. Under the selection/sorting criteria, however, they count as wearing short sleeve shirts and thus could not be included in the long sleeve dataset. Doing this should limit the kind of misclassification that the high-water pants and long shorts caused in the previous post. It is an acceptable approach because, when this model is used as a ReID attribute generator, rolled-up sleeves can be treated as short sleeves given where they sit on the arm. It therefore passes the logical "Duck Test".

If it looks like a duck, swims like a duck, and quacks like a duck, then it probably is a duck.
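The sorting rule above can be written down as a small function. This is only a sketch of the criterion as described in this post: the function name `label_upper_body` and its two boolean flags are hypothetical stand-ins for the judgments made by hand while sorting the images, not code from the actual pipeline.

```python
from typing import Optional


def label_upper_body(skin_visible: bool, sleeves_rolled_up: bool) -> Optional[str]:
    """Return "short", "long", or None when the image is left out of training."""
    if sleeves_rolled_up:
        # Ambiguous case: a long sleeve shirt that still shows skin.
        # These images were discarded from the training data.
        return None
    if skin_visible:
        # "Hard choice": any visible skin counts as short sleeves.
        return "short"
    return "long"


print(label_upper_body(skin_visible=True, sleeves_rolled_up=False))
```

At inference time the model has no "rolled up" flag, so such a shirt would simply be expected to fall on the short sleeve side, which is the Duck Test in action.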

Data Change:

For long and short sleeves, the Market1501 dataset is not sufficient, as there are no long sleeve shirts in it. So, I had to bring in another ReID dataset called CUHK01 (Li et al., 2014). It is a very similar dataset and is often used alongside Market1501 in the same study as alternative or extra data, which makes it an acceptable addition to Market1501 for the long sleeve class.

Long or Short, Another Binary Heads or Tails:

Since this is another binary problem, it will be very similar to the gender classifier we built in part I (see the link above). To keep things simple, we will just borrow the new version of the gender classification model. It should work well here, because long and short sleeves are easier to tell apart than gender.

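```python
# Imports assume tf.keras
from tensorflow.keras.layers import Input, Dense, Dropout, BatchNormalization
from tensorflow.keras.models import Model

# base_extractor is the shared pre-trained feature extractor. It is not
# defined in this post and is assumed to be available from the earlier parts.


def build():
    # Input size as given in the post. Note that crop_generator yields
    # 112x224 crops, so this shape may need to match what is actually fed in.
    img = Input(shape=(224, 224, 3))
    # Get the base of the image
    x = base_extractor(img)
    print(x.shape)
    # First dense block
    x = Dense(2048, activation='relu')(x)
    x = Dense(2048, activation='relu')(x)
    x = Dropout(0.3)(x)
    x = BatchNormalization()(x)
    # Second dense block
    x = Dense(2048, activation='relu')(x)
    x = Dense(2048, activation='relu')(x)
    x = Dropout(0.3)(x)
    x = BatchNormalization()(x)
    # Single sigmoid unit for the binary long/short decision
    pred = Dense(1, activation='sigmoid')(x)
    return Model(inputs=img, outputs=pred)
```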

The data setup is much the same as for the lower body classifier, except that we are now only interested in the upper portion of the body. We can use a slightly modified version of the image cropper from the lower body model.

```python
import numpy as np


def crop_upper_part(img):
    # Keep the top half of the image at full width
    y, x, _ = img.shape
    startx = 0
    starty = 0
    return img[starty:y // 2, startx:startx + x]


def crop_generator(batches):
    # Wrap a batch generator and yield upper-body crops with the original labels
    while True:
        batch_x, batch_y = next(batches)
        batch_crops = np.zeros((batch_x.shape[0], 112, 224, 3))
        for i in range(batch_x.shape[0]):
            batch_crops[i] = crop_upper_part(batch_x[i])
        yield (batch_crops, batch_y)
```
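To check what the cropper does to a batch, here is a small self-contained sketch that needs only NumPy. `fake_batches` is a hypothetical stand-in for the real Keras image generator and just produces random 224x224 RGB images with random binary labels; the two cropping functions repeat the logic above so that the block runs on its own.

```python
import numpy as np


def crop_upper_part(img):
    # Same logic as the cropper above: keep the top half of the image.
    height, width, _ = img.shape
    return img[0:height // 2, 0:width]


def fake_batches(batch_size=4):
    # Hypothetical stand-in for the real image generator.
    rng = np.random.default_rng(0)
    while True:
        images = rng.random((batch_size, 224, 224, 3))
        labels = rng.integers(0, 2, batch_size)
        yield images, labels


def crop_generator(batches):
    while True:
        batch_x, batch_y = next(batches)
        batch_crops = np.zeros((batch_x.shape[0], 112, 224, 3))
        for i in range(batch_x.shape[0]):
            batch_crops[i] = crop_upper_part(batch_x[i])
        yield batch_crops, batch_y


crops, labels = next(crop_generator(fake_batches()))
print(crops.shape, labels.shape)  # (4, 112, 224, 3) (4,)
```

Only the image height is halved; the width, the channels, and the labels pass through unchanged.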

The rest of the model follows a typical setup, so I will not go into it, but I will leave it here in case you want it.

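```python
# Imports assume tf.keras
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.callbacks import ModelCheckpoint, ReduceLROnPlateau

# BATCH_SIZE, train_generator and validation_generator come from the data
# setup, which is not shown in this post.
EPOCHS = 10
LR = 0.001

# Note: newer Keras versions use `learning_rate` instead of `lr`
opt = SGD(lr=LR, momentum=0.9, decay=LR / EPOCHS)
model = build()
model.compile(loss="binary_crossentropy", optimizer=opt, metrics=["accuracy"])

# Save the full model whenever the validation accuracy improves
filepath = "LongShort-{epoch:02d}-{val_accuracy:.4f}.h5"
checkpoint = ModelCheckpoint(filepath, monitor='val_accuracy', verbose=1,
                             save_best_only=True, mode='max',
                             save_weights_only=False)
# Cut the learning rate when the validation loss stops improving
reduce_lr = ReduceLROnPlateau(monitor='val_loss', factor=0.2,
                              patience=1, min_lr=0.0001)
callbacks_list = [checkpoint, reduce_lr]

# Note: in TensorFlow 2.x, fit_generator is deprecated in favor of model.fit
history = model.fit_generator(
    train_generator,
    steps_per_epoch=train_generator.samples // BATCH_SIZE,
    validation_data=validation_generator,
    validation_steps=validation_generator.samples // BATCH_SIZE,
    epochs=EPOCHS,
    verbose=1,
    callbacks=callbacks_list)
```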
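One detail worth spelling out is the `decay=LR / EPOCHS` argument. In the legacy Keras SGD optimizer, as far as I understand it, this decay is applied on every update step (batch) rather than once per epoch, following lr / (1 + decay * iterations). The sketch below only reproduces that arithmetic in plain Python. `decayed_lr` is a hypothetical helper, and it ignores any extra reduction coming from `ReduceLROnPlateau`.

```python
EPOCHS = 10
LR = 0.001
DECAY = LR / EPOCHS  # same value that is passed to SGD above


def decayed_lr(iteration, base_lr=LR, decay=DECAY):
    # Time-based decay: the rate shrinks a little after every update step.
    return base_lr / (1.0 + decay * iteration)


for step in (0, 1000, 10000):
    print(step, decayed_lr(step))
```

With a decay this small, the learning rate only halves after about 10,000 update steps, so over a short run most of the learning rate reduction would come from the plateau callback, if it triggers.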

Results:

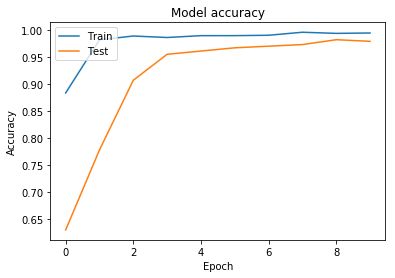

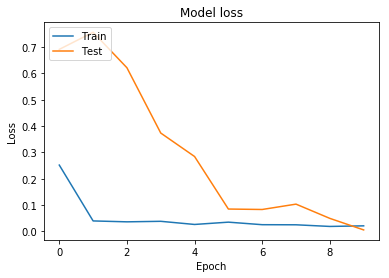

The results are very good. Evaluating the model gives the following score (loss) and accuracy:

[0.00686639454215765, 0.9836065769195557]

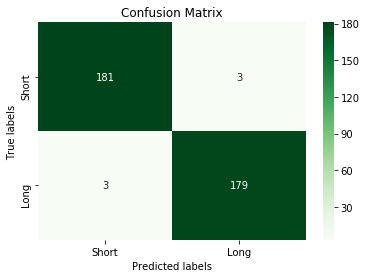

Looking at the confusion matrix, the model performs very well: only 3 images of each class are misclassified out of 366.

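Confusion Matrix (rows: actual class, columns: predicted class)

| | Predicted Short | Predicted Long |
|---|---|---|
| Actual Short | 181 | 3 |
| Actual Long | 3 | 179 |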

Classification Report

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Short | 0.98 | 0.98 | 0.98 | 184 |
| Long | 0.98 | 0.98 | 0.98 | 182 |
| accuracy | | | 0.98 | 366 |
| macro avg | 0.98 | 0.98 | 0.98 | 366 |
| weighted avg | 0.98 | 0.98 | 0.98 | 366 |
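The numbers in the report can be reproduced by hand from the confusion matrix. The sketch below assumes that rows are the actual classes and columns the predicted classes, in the order Short, Long, which is consistent with the support counts of 184 and 182. It uses only NumPy and is not the code that produced the report.

```python
import numpy as np

# Rows: actual class, columns: predicted class (order: Short, Long).
cm = np.array([[181, 3],
               [3, 179]])

accuracy = np.trace(cm) / cm.sum()        # correct predictions / all samples
precision = np.diag(cm) / cm.sum(axis=0)  # per predicted class
recall = np.diag(cm) / cm.sum(axis=1)     # per actual class
f1 = 2 * precision * recall / (precision + recall)

print(round(accuracy, 4))  # 0.9836
print(np.round(precision, 2), np.round(recall, 2), np.round(f1, 2))
```

The accuracy of 360 / 366 matches the evaluation accuracy reported above, which is a quick sanity check that the evaluation and the report were run on the same 366 images.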

Conclusion:

So, this model has performed stellarly. Why is that? It comes from the lessons I learned while building the other two models: I used what worked and discarded what didn't.

By using a fine-tuned pre-trained model, the accuracy gets a boost for this dataset. Cropping the image to only what I want the model to learn has greatly increased the accuracy. And keeping the preprocessing of each image and the fine-tuned base model the same across every model makes sure there are no issues in data preparation. This also future-proofs these models, so I know that they will work together in the final product.

Join me next time when we will start on the color of the upper body.

References:

W. Li, R. Zhao, T. Xiao and X. Wang, "DeepReID: Deep Filter Pairing Neural Network for Person Re-identification," 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, 2014, pp. 152-159. doi: 10.1109/CVPR.2014.27

Image from https://www.pexels.com/search/duck/